| No. | Domain | Total Questions | Correct | Incorrect | Unattempted | Marked for Review |
|---|---|---|---|---|---|---|
| 1 | Develop Azure compute solutions | 14 | 0 | 0 | 14 | 0 |
| 2 | Develop for Azure storage | 13 | 0 | 0 | 13 | 0 |
| 3 | Implement Azure security | 6 | 0 | 0 | 6 | 0 |
| 4 | Monitor, troubleshoot, and optimize Azure solutions | 7 | 0 | 0 | 7 | 0 |
| 5 | Connect to and consume Azure services and third-party services | 15 | 0 | 0 | 15 | 0 |
| Total | All Domains | 55 | 0 | 0 | 55 | 0 |

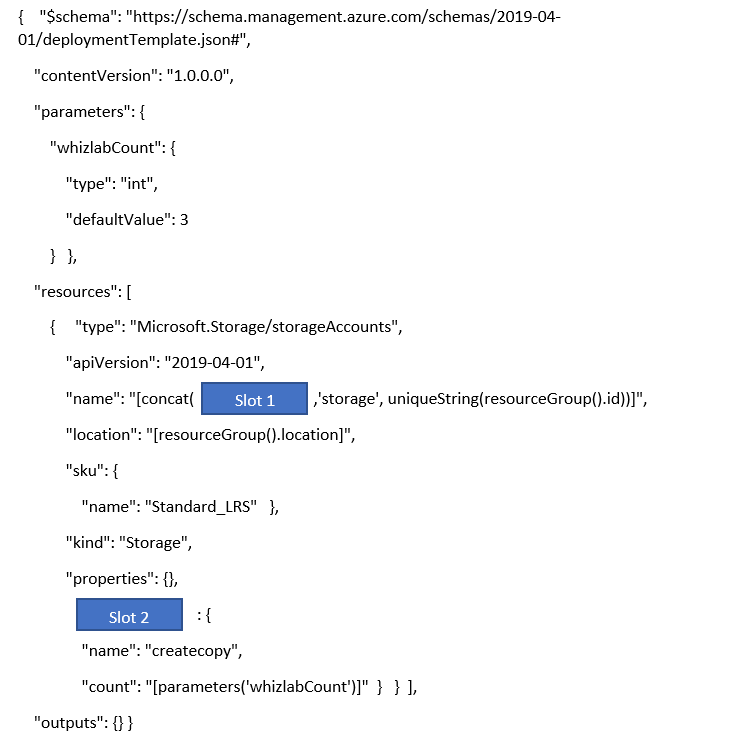

You need to deploy a set of storage accounts. Complete the ARM template below so that it deploys three storage accounts.

Which of the following would go into Slot 1?

- A. copy

- B. copyIndex()

- C. storageindex()

- D. storage

Explanation:

Answer – B

Use the copy element to create multiple instances of a resource. Inside the loop, the copyIndex() function returns the current iteration index (starting at 0), which you can use to give each storage account a unique name.

An example of this is also given in the Microsoft documentation

copy is the element that declares the loop rather than a function that returns the index, and storageindex() and storage are not ARM template functions, so the other options are incorrect.

For more information on creating multiple resources via ARM templates, please refer to the following URL
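A minimal sketch of such a loop, assuming you generate the template from Python and deploy it with a tool such as az deployment group create. The apiVersion, SKU, kind and uniqueString() suffix are illustrative assumptions, not values taken from the question's template; the point is that the copy element sets how many instances are created and copyIndex() supplies the per-instance index.

```python
import json
from typing import Any, Dict, List


def storage_loop_template(count: int = 3) -> Dict[str, Any]:
    """Return an ARM template that deploys `count` storage accounts with a copy loop."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "location": {"type": "string", "defaultValue": "[resourceGroup().location]"}
        },
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2022-09-01",
                # copyIndex() evaluates to 0, 1, 2, ... on each iteration; uniqueString()
                # adds a suffix because storage account names must be globally unique.
                "name": "[concat('storage', copyIndex(), uniqueString(resourceGroup().id))]",
                "location": "[parameters('location')]",
                "sku": {"name": "Standard_LRS"},
                "kind": "StorageV2",
                # The copy element tells Resource Manager how many instances to create.
                "copy": {"name": "storagecopy", "count": count},
            }
        ],
    }


def loop_indices(count: int, offset: int = 0) -> List[int]:
    """The values copyIndex(offset) takes across a loop of `count` iterations."""
    return [i + offset for i in range(count)]


if __name__ == "__main__":
    print(json.dumps(storage_loop_template(), indent=2))
```

copyIndex() starts at 0; copyIndex(1) would start numbering at 1, which loop_indices models with its offset argument.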

A Weather Forecasting Agency provides its customers with a detailed weather forecast for the next 7 days through its website. The agency now plans to show each user a personalized, summarized view of past and future weather data. The website will connect internally to a backend web API to fetch forecast results.

As the developer of the application, you have been asked to create the backend API in API Management and protect it using OAuth 2.0 with Azure Active Directory. You have identified the following tasks to implement:

Drag the correct options from the Actions area and drop them to the answer area in the correct order.

Correct Answer:

1. Register the backend API application in Azure AD.
2. Register the web app (the website containing the summarized results) in Azure AD so that it can call the backend API.
3. Grant the website app permission to call the backend API app by adding the configuration in Azure AD.
4. Enable OAuth 2.0 user authorization and add the validate-jwt policy to validate the OAuth token for API calls.

Explanation:


Conceptually, below are the implementation steps:

- Register the backend API App and frontend Web App in Azure AD.

- In Azure Active Directory, allow the frontend Web App to connect to the backend API.

- Enable OAuth 2.0 user authorization in the API Management instance, and then configure a JWT validation policy (validate-jwt) that validates the incoming OAuth token.
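The validate-jwt step can be sketched as follows. This Python helper only generates the policy XML; the tenant ID and backend client ID are placeholders for the values from the two app registrations, and the aud check assumes v2.0 access tokens (for v1 tokens the audience is often an api:// App ID URI instead).

```python
import xml.etree.ElementTree as ET


def validate_jwt_policy(tenant_id: str, backend_client_id: str) -> str:
    """Build an API Management <validate-jwt> policy for Azure AD access tokens."""
    policy = ET.Element(
        "validate-jwt",
        {
            "header-name": "Authorization",
            "failed-validation-httpcode": "401",
            "failed-validation-error-message": "Unauthorized. Access token is missing or invalid.",
        },
    )
    # APIM reads the issuer and signing keys from Azure AD's OpenID configuration.
    ET.SubElement(
        policy,
        "openid-config",
        {"url": f"https://login.microsoftonline.com/{tenant_id}/v2.0/.well-known/openid-configuration"},
    )
    # Only accept tokens whose audience is the backend API registration (task 1).
    claims = ET.SubElement(policy, "required-claims")
    audience = ET.SubElement(claims, "claim", {"name": "aud"})
    ET.SubElement(audience, "value").text = backend_client_id
    return ET.tostring(policy, encoding="unicode")


if __name__ == "__main__":
    print(validate_jwt_policy("your-tenant-id", "your-backend-app-client-id"))
```

The generated fragment would go into the inbound section of the API's policy.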

Reference:

See the Microsoft documentation for more details on the various ways to protect an API using Azure Active Directory:

As a developer at Whizlab travel agency, you need to develop a web application. For development purposes, you decide to create a self-signed certificate and use it during the development phase.

You are using Azure Key Vault and PowerShell to create and manage the certificate.

Select the two options required to create a certificate in Azure Key Vault using PowerShell.

- A. $certificatepolicy = New-AzKeyVaultCertificatePolicy -SecretContentType "application/x-pkcs12" -SubjectName "CN=whizlab.com" -IssuerName "Self" -ValidityInMonths 6 -ReuseKeyOnRenewal

- B. $certificatepolicy = Set-AzureRmKeyVaultAccessPolicy -SecretContentType "application/x-pkcs12" -SubjectName "CN=whizlab.com" -IssuerName "Self" -ValidityInMonths 6 -ReuseKeyOnRenewal

- C. Add-AzureKeyVaultCertificate -VaultName " WhizlabkeyVault " -Name " WhizlabDevCert1" -CertificatePolicy $certificatepolicy

- D. Add-AzKeyVaultCertificate -VaultName "WhizlabkeyVault" -Name "WhizlabDevCert1" -CertificatePolicy $certificatepolicy

Explanation:

Answer: A and D

To create a new certificate in Azure Key Vault, you first need a certificate policy. The policy specifies the subject name, issuer name, validity period and other details, and you create it with the New-AzKeyVaultCertificatePolicy cmdlet from the Az PowerShell module.

You then create the certificate in the key vault with the Add-AzKeyVaultCertificate cmdlet, specifying the key vault name, the name of the new certificate and the certificate policy.

Option B is incorrect because Set-AzureRmKeyVaultAccessPolicy manages key vault access policies, not certificate policies, and belongs to the deprecated AzureRM module. Option C is incorrect because Add-AzureKeyVaultCertificate is the older AzureRM-era cmdlet name, not the current Az module cmdlet.
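For comparison, the same policy can be expressed as a request to the Key Vault REST API's create-certificate operation. This Python sketch only builds the URL and JSON body and does not send them (sending requires an Azure AD bearer token). The api-version value and the mapping of each PowerShell parameter to a policy field are assumptions noted in the comments; check them against the Key Vault REST reference.

```python
import json
from typing import Tuple
from urllib.parse import quote

API_VERSION = "7.4"  # assumption: a current Key Vault data-plane API version


def create_certificate_request(
    vault_name: str,
    cert_name: str,
    subject: str = "CN=whizlab.com",
    validity_months: int = 6,
) -> Tuple[str, str]:
    """Return (url, json_body) for creating a self-signed certificate in Key Vault."""
    url = (
        f"https://{vault_name}.vault.azure.net/certificates/"
        f"{quote(cert_name)}/create?api-version={API_VERSION}"
    )
    policy = {
        # -SecretContentType "application/x-pkcs12"
        "secret_props": {"contentType": "application/x-pkcs12"},
        # -SubjectName and -ValidityInMonths
        "x509_props": {"subject": subject, "validity_months": validity_months},
        # -IssuerName "Self" makes Key Vault issue a self-signed certificate
        "issuer": {"name": "Self"},
        # -ReuseKeyOnRenewal
        "key_props": {"reuse_key": True},
    }
    return url, json.dumps({"policy": policy})


if __name__ == "__main__":
    print(*create_certificate_request("WhizlabkeyVault", "WhizlabDevCert1"), sep="\n")
```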

Reference:

Other operations, such as retrieving certificates, can also be performed with PowerShell. They are documented at:

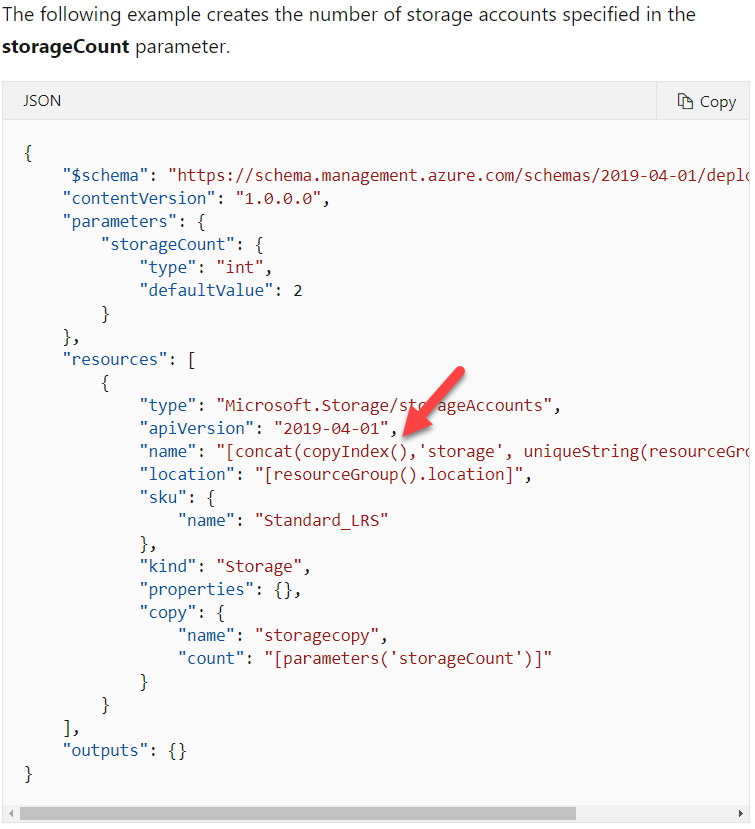

You are developing an Azure Function. This function will be using the Azure Blob storage trigger. You have to ensure the Function is triggered whenever .png files are added to a container named data.

You decide to add the following filter in the function.json file

"path": "data/png"

Does this filter meet the requirement?

- A. Yes

- B. No

Explanation:

Answer – B

No. A blob trigger path has the form container/blob-name-pattern, so "data/png" matches only a blob named exactly png in the data container, not blobs whose names end in .png.

The correct filter is:

"path": "data/{name}.png"

For more information on the blob trigger, please refer to the following URL

You are developing an Azure Function. This function will be using the Azure Blob storage trigger. You have to ensure the Function is triggered whenever .png files are added to a container named data.

You decide to add the following filter in the function.json file

"path": "*.png"

Does this filter meet the requirement?

- A. Yes

- B. No

Explanation:

Answer – B

No. The path must start with the container name (data), and blob trigger paths use binding expressions such as {name} rather than * wildcards.

The correct filter is:

"path": "data/{name}.png"

For more information on the blob trigger, please refer to the following URL

You are developing an Azure Function. This function will be using the Azure Blob storage trigger. You have to ensure the Function is triggered whenever .png files are added to a container named data.

You decide to add the following filter in the function.json file

"path": "data/{name}.png"

Does this filter meet the requirement?

- A. Yes

- B. No

Explanation:

Answer - A

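Yes. The path starts with the container name (data), and the {name}.png pattern matches any blob whose name ends in .png, binding the rest of the blob name to the name parameter.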

An example of this is also given in the Microsoft documentation

For more information on the blob trigger, please refer to the following URL
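To see why only the last filter works, here is a deliberately simplified model of blob trigger path matching in Python. It is not the Functions runtime's matcher: it assumes each {binding} matches one or more characters and that everything else, including *, must match literally.

```python
import re


def blob_path_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a simple blob trigger path such as 'data/{name}.png' into a regex."""
    # re.split with a capture group alternates literal text and binding names:
    # 'data/{name}.png' -> ['data/', 'name', '.png']
    parts = re.split(r"\{(\w+)\}", pattern)
    regex = ""
    for i, part in enumerate(parts):
        if i % 2 == 0:
            regex += re.escape(part)  # literal text, e.g. 'data/' and '.png'
        else:
            regex += f"(?P<{part}>.+)"  # binding expression, e.g. {name}
    return re.compile(regex + r"\Z")


def triggers(pattern: str, blob_path: str) -> bool:
    """True if a blob at `blob_path` (container/blob) would match `pattern`."""
    return blob_path_regex(pattern).match(blob_path) is not None


if __name__ == "__main__":
    for pattern in ("data/png", "*.png", "data/{name}.png"):
        print(pattern, triggers(pattern, "data/cat.png"))
```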

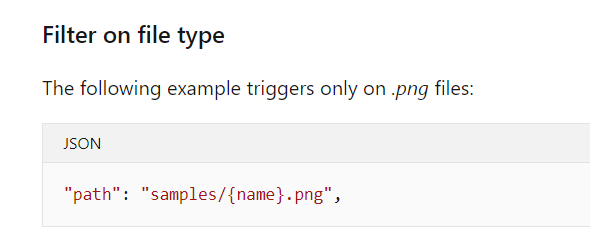

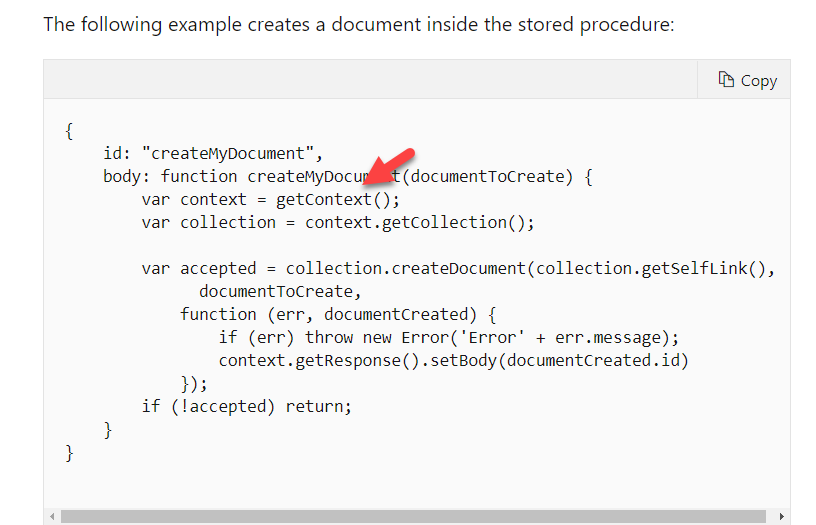

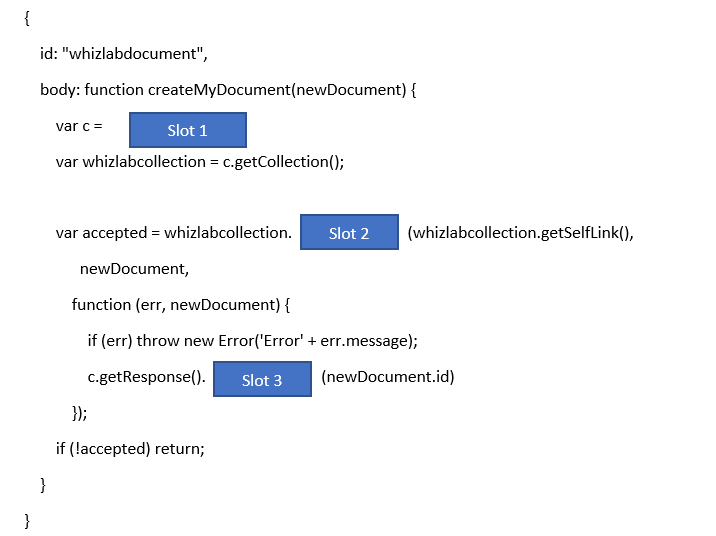

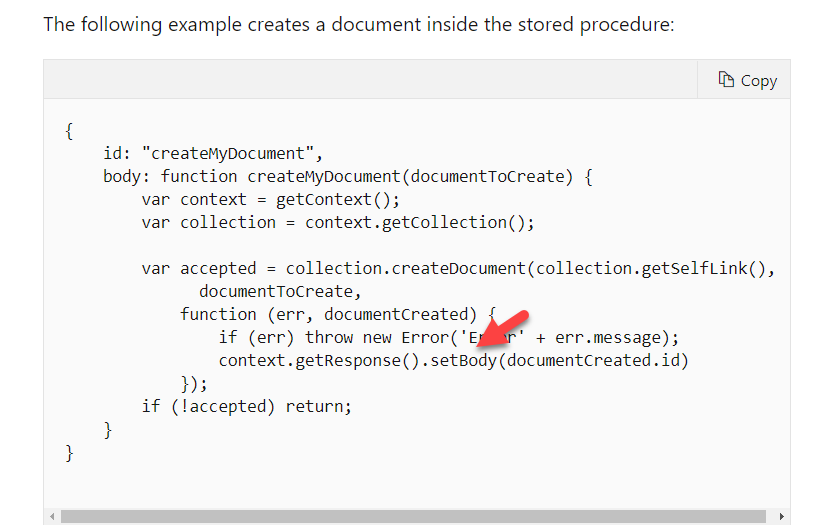

Your team has an Azure Cosmos DB Account of the type SQL API. You have to develop a simple stored procedure that would perform the activity of adding a document to a Cosmos DB container.

You have to complete the below code snippet for this requirement

Which of the following would go into Slot 1?

- A. getBody

- B. setBody

- C. getDocument();

- D. getContext();

- E. createDocument

- F. newDocument

Explanation:

Answer – D

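The first step is to call getContext(), which returns the context object for the request. The context gives access to the collection (container) and to the response.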

An example of this is also given in the Microsoft documentation

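getBody and setBody operate on the request and response objects obtained later, createDocument is a method of the collection, and getDocument() and newDocument are not part of the server-side JavaScript API, so the other options are incorrect.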

For more information on using Cosmos DB stored procedures, please refer to the following URL

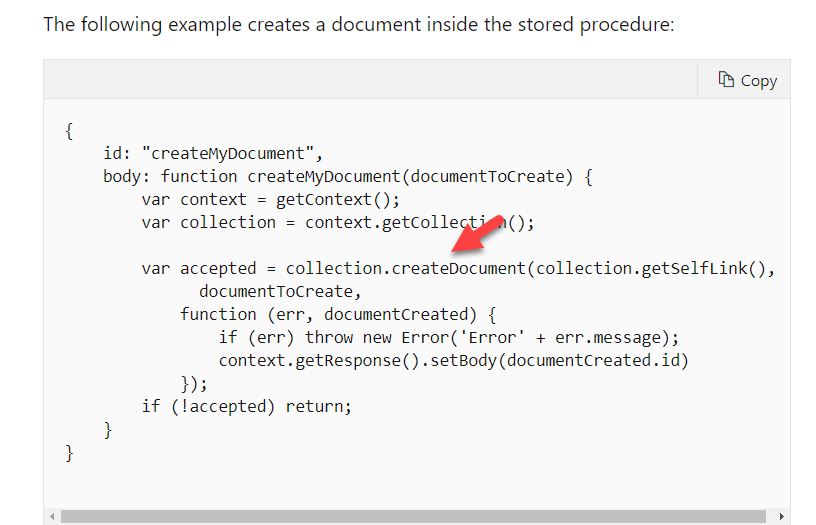

Your team has an Azure Cosmos DB Account of the type SQL API. You have to develop a simple stored procedure that would perform the activity of adding a document to a Cosmos DB container.

You have to complete the below code snippet for this requirement

Which of the following would go into Slot 2?

- A. getBody

- B. setBody

- C. getDocument();

- D. getContext();

- E. createDocument

- F. newDocument

Explanation:

Answer – E

The createDocument method of the collection object creates a new document in the Cosmos DB container.

An example of this is also given in the Microsoft documentation

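getContext() is called earlier to obtain the context, getBody and setBody work with the request and response, and getDocument() and newDocument are not methods of the collection object, so the other options are incorrect.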

For more information on using Cosmos DB stored procedures, please refer to the following URL

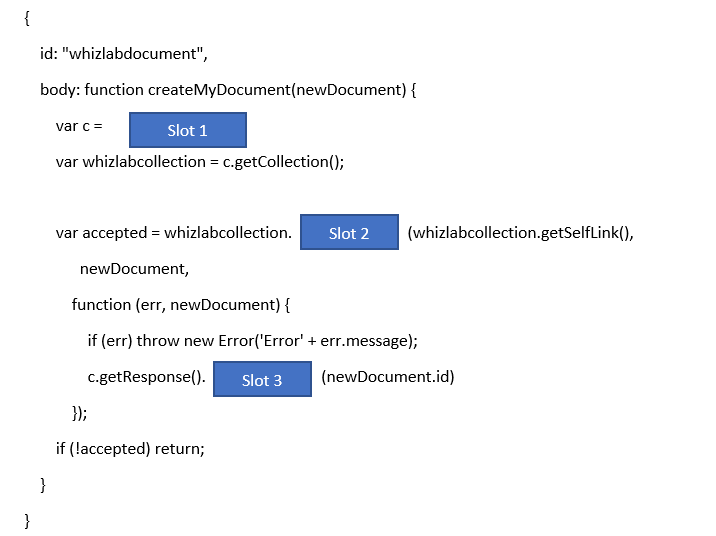

Your team has an Azure Cosmos DB Account of the type SQL API. You have to develop a simple stored procedure that would perform the activity of adding a document to a Cosmos DB container.

You have to complete the below code snippet for this requirement

Which of the following would go into Slot 3?

- A. getBody

- B. setBody

- C. getDocument();

- D. getContext();

- E. createDocument

- F. newDocument

Explanation:

Answer – B

In the createDocument callback, set the body of the response to the ID of the new document by calling setBody() on the response object.

An example of this is also given in the Microsoft documentation

getBody reads a body rather than setting it, and the remaining options are either used earlier in the procedure or are not part of the API, so the other options are incorrect.

For more information on using Cosmos DB stored procedures, please refer to the following URL
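Cosmos DB stored procedures are written in JavaScript and run inside the database, so the following is only an in-memory Python mock. It mirrors the server-side names (getContext, getCollection, getSelfLink, createDocument, getResponse, setBody) to show the order in which the three slots are used; the mock classes and the self link are hypothetical.

```python
import uuid
from typing import Any, Callable, Dict, Optional


class Response:
    """Mock of the server-side response object."""

    def __init__(self) -> None:
        self.body: Any = None

    def setBody(self, body: Any) -> None:  # camelCase mirrors the JavaScript API
        self.body = body


class Collection:
    """Mock of the server-side collection (container) object."""

    def __init__(self) -> None:
        self.docs: Dict[str, Dict[str, Any]] = {}

    def getSelfLink(self) -> str:
        return "dbs/mydb/colls/mycoll"  # hypothetical self link

    def createDocument(self, link: str, doc: Dict[str, Any],
                       callback: Callable[[Optional[Exception], Dict[str, Any]], None]) -> bool:
        created = dict(doc)
        created.setdefault("id", str(uuid.uuid4()))  # mimics automatic id generation
        self.docs[created["id"]] = created
        callback(None, created)  # (error, createdDocument), as in the JS callback
        return True  # True means the request was accepted


class Context:
    def __init__(self) -> None:
        self._collection = Collection()
        self._response = Response()

    def getCollection(self) -> Collection:
        return self._collection

    def getResponse(self) -> Response:
        return self._response


_CONTEXT = Context()


def getContext() -> Context:
    return _CONTEXT


def create_sample_document(document_to_create: Dict[str, Any]) -> bool:
    context = getContext()  # Slot 1: get the request context
    collection = context.getCollection()

    def on_created(error: Optional[Exception], created: Dict[str, Any]) -> None:
        if error:
            raise error
        context.getResponse().setBody(created["id"])  # Slot 3: return the new id

    # Slot 2: create the document in the current collection
    return collection.createDocument(collection.getSelfLink(), document_to_create, on_created)
```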

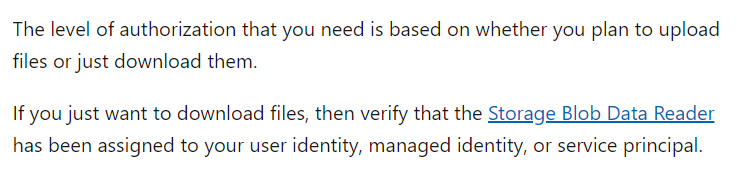

Your team has an Azure storage account. You have to use the AzCopy tool to download a set of blobs from a container. Which of the following is the least privileged role that must be assigned on the storage account for this requirement?

- A. Storage Blob Data Reader

- B. Storage Blob Data Contributor

- C. Reader

- D. Contributor

Explanation:

Answer – A

If you only need to download blobs, the Storage Blob Data Reader role is sufficient.

This is also given in the Microsoft documentation

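Storage Blob Data Contributor also grants write and delete access to blob data, Contributor grants broad management access, and Reader grants only control-plane read access, which does not allow reading blob data when AzCopy authenticates with Azure AD. The other options are therefore incorrect.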

For more information on working with the AzCopy tool, please refer to the following URL
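The least-privilege reasoning can be sketched as a lookup over role data actions. The table below is an abbreviated, hand-copied subset of the built-in role definitions (control-plane actions omitted) and should be treated as an assumption to verify against the Azure built-in roles reference; it applies when AzCopy authenticates with Azure AD, for example after azcopy login.

```python
from typing import Dict, List

BLOB = "Microsoft.Storage/storageAccounts/blobServices/containers/blobs"
BLOB_READ = f"{BLOB}/read"

# Abbreviated dataActions of the candidate built-in roles (control-plane actions omitted).
ROLE_DATA_ACTIONS: Dict[str, List[str]] = {
    "Storage Blob Data Reader": [BLOB_READ],
    "Storage Blob Data Contributor": [
        f"{BLOB}/delete",
        BLOB_READ,
        f"{BLOB}/write",
        f"{BLOB}/move/action",
        f"{BLOB}/add/action",
    ],
    "Reader": [],       # control plane "*/read" only, no blob data access
    "Contributor": [],  # control plane "*", but no dataActions
}


def least_privileged(required_action: str) -> str:
    """Pick the role that grants the action with the fewest data actions."""
    candidates = [role for role, acts in ROLE_DATA_ACTIONS.items() if required_action in acts]
    if not candidates:
        raise LookupError(f"no candidate role grants {required_action}")
    return min(candidates, key=lambda role: len(ROLE_DATA_ACTIONS[role]))


if __name__ == "__main__":
    print(least_privileged(BLOB_READ))
```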

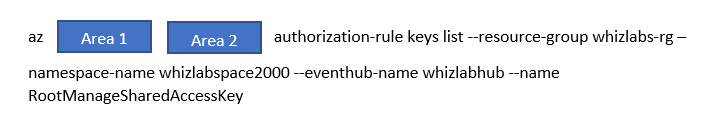

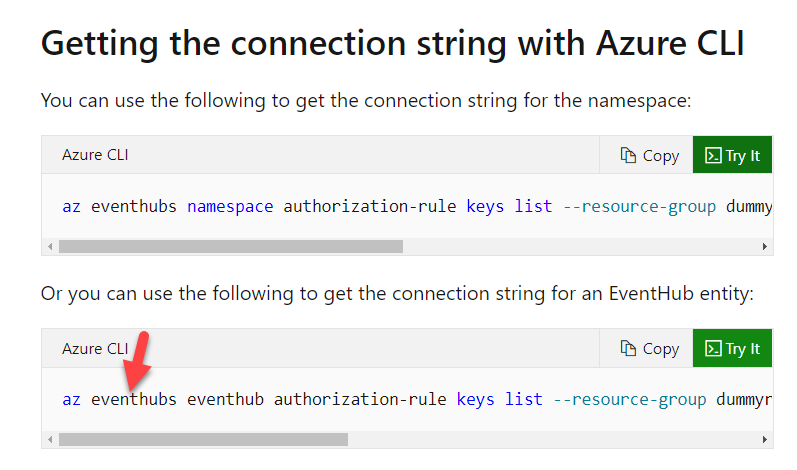

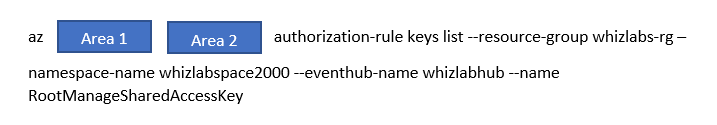

You have to develop a solution that uses Azure Event Hubs. You need to get the connection string of an existing event hub to use in the program.

You have to issue an Azure CLI command to fetch the connection string.

Which of the following would go into Area 1?

- A. get

- B. eventhubs

- C. eventhub

- D. connection-string

Explanation:

Answer – B

The Event Hubs commands in the Azure CLI live in the az eventhubs command group.

This is also given in the Microsoft documentation

eventhub (singular) is a subgroup inside az eventhubs, and get and connection-string are not Azure CLI command groups, so the other options are incorrect.

For more information on working with Event Hubs connection string, please refer to the following URL

You have to develop a solution that uses Azure Event Hubs. You need to get the connection string of an existing event hub to use in the program.

You have to issue an Azure CLI command to fetch the connection string.

Which of the following would go into Area 2?

- A. get

- B. eventhubs

- C. eventhub

- D. connection-string

Explanation:

Answer – C

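Within az eventhubs, the eventhub subgroup manages an individual event hub, including the authorization rules whose keys contain the connection string.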

This is also given in the Microsoft documentation

eventhubs is the parent command group, and get and connection-string are not subgroups of az eventhubs, so the other options are incorrect.

For more information on working with Event Hubs connection string, please refer to the following URL
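Putting both parts together, the full command looks roughly like the one this Python sketch builds. The resource group, namespace, event hub and authorization rule names are hypothetical, and the --query and --output flags are an added assumption to print only the primary connection string. The sketch builds the argument list and leaves running it to you.

```python
import shlex
from typing import List


def eventhub_connection_string_cmd(
    resource_group: str, namespace: str, eventhub: str, rule: str
) -> List[str]:
    """Build the az CLI arguments that print an event hub's primary connection string."""
    return [
        "az", "eventhubs",  # the Event Hubs command group
        "eventhub",         # subgroup for an individual event hub
        "authorization-rule", "keys", "list",
        "--resource-group", resource_group,
        "--namespace-name", namespace,
        "--eventhub-name", eventhub,
        "--name", rule,
        "--query", "primaryConnectionString",
        "--output", "tsv",
    ]


if __name__ == "__main__":
    cmd = eventhub_connection_string_cmd("myResourceGroup", "mynamespace", "myeventhub", "myrule")
    print(shlex.join(cmd))
```

Passing the list to subprocess.run(cmd, capture_output=True, text=True, check=True) would run it on a machine where the Azure CLI is installed and signed in.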

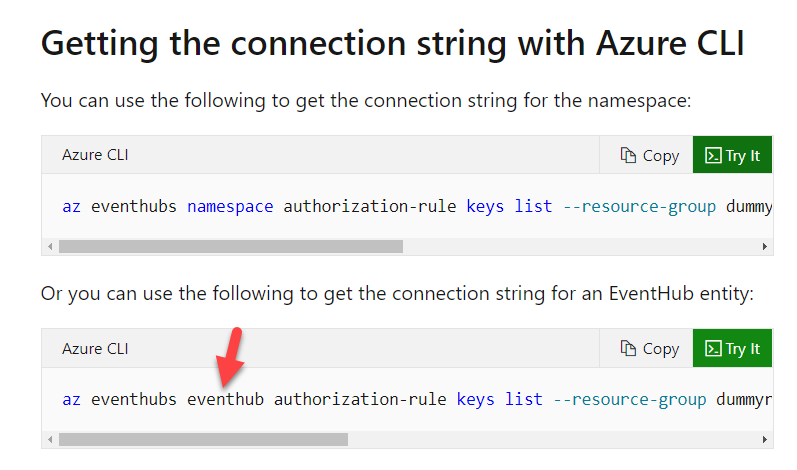

You have an Azure key vault that contains a secret with several versions. You have to issue a REST API call to get a specific version of the secret. Which of the following would you use in the REST API call to specify the secret version?

- A. A session variable

- B. A query string parameter

- C. An Environment variable

- D. A POST parameter

Explanation:

Answer – B

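To get a specific version of a secret, you identify the version in the request URI; session variables, environment variables and POST parameters play no part in a Get Secret request. Strictly speaking, the Get Secret operation carries the version as a URI path segment ({vaultBaseUrl}/secrets/{secret-name}/{secret-version}) and the api-version as a query string parameter, so of the options listed, B is the only one that passes the value in the request URI.

This is also described in the Microsoft documentation.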

For more information on getting secret versions, please refer to the following URL

https://learn.microsoft.com/en-us/rest/api/keyvault/secrets/get-secret-versions
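A small sketch of the two request URLs involved, assuming a vault named myvault, a secret named mysecret and api-version 7.4 (all placeholders). It shows that the list-versions call passes maxresults and api-version in the query string, while a specific version is addressed by appending its identifier to the secret's URI.

```python
from typing import Optional
from urllib.parse import quote, urlencode


def secret_url(vault: str, name: str, version: Optional[str] = None, api_version: str = "7.4") -> str:
    """URL for Get Secret; the version, when given, is a path segment."""
    path = f"https://{vault}.vault.azure.net/secrets/{quote(name)}"
    if version:
        path += f"/{quote(version)}"
    return f"{path}?{urlencode({'api-version': api_version})}"


def secret_versions_url(vault: str, name: str, max_results: int = 25, api_version: str = "7.4") -> str:
    """URL for Get Secret Versions; paging and API version travel in the query string."""
    query = urlencode({"maxresults": max_results, "api-version": api_version})
    return f"https://{vault}.vault.azure.net/secrets/{quote(name)}/versions?{query}"


if __name__ == "__main__":
    print(secret_versions_url("myvault", "mysecret"))
    print(secret_url("myvault", "mysecret", "abc123"))
```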

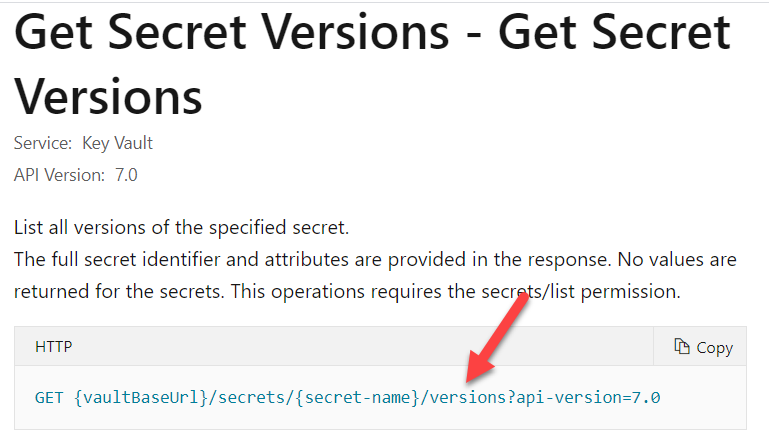

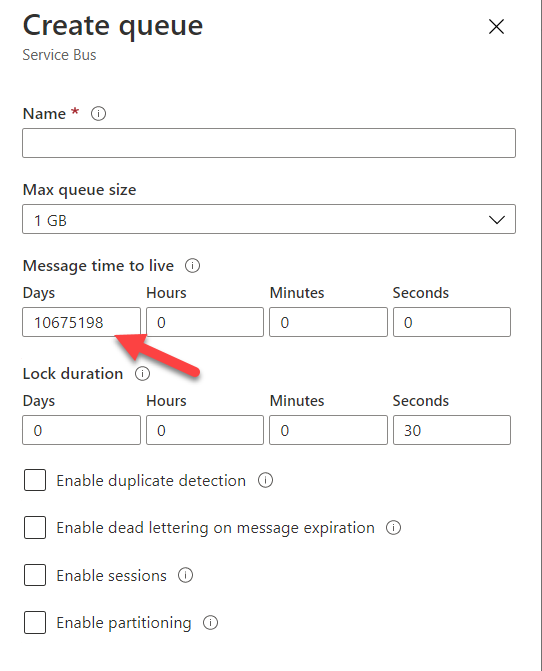

You have to develop an application that is going to send messages to an Azure Service Bus queue. You have to develop a module that will ensure messages sent to the queue are only moved to the Active state after a certain period of time. Which of the following would you implement to fulfil this requirement?

- A. Set the Lock property for the queue

- B. Set the timeout property for the queue messages

- C. Set the ScheduledEnqueueTimeUtc property for the messages

- D. Set the SequenceNumber property for the messages

Explanation:

Answer – C

Here you need to schedule the messages. When ScheduledEnqueueTimeUtc is set, the queue accepts the message immediately, but the message only becomes active (available to receivers) at the specified UTC time.

The Microsoft documentation mentions the following

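A lock applies only to a message that a receiver has already received, a timeout (time-to-live) controls when a message expires rather than when it becomes active, and the SequenceNumber is assigned by the service rather than set by the sender, so the other options are incorrect.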

For more information on scheduling of messages, please refer to the following URL
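The effect of ScheduledEnqueueTimeUtc can be illustrated with a toy in-memory queue in Python. This is not the Service Bus SDK; in the azure-servicebus package the equivalent is setting ServiceBusMessage.scheduled_enqueue_time_utc or calling ServiceBusSender.schedule_messages. The model only shows that a scheduled message is accepted immediately but does not become active until its UTC time arrives.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class Message:
    body: str
    # Mirrors ScheduledEnqueueTimeUtc; None means the message is active immediately.
    scheduled_enqueue_time_utc: Optional[datetime] = None


@dataclass
class ToyQueue:
    """In-memory model of scheduled delivery, not the Service Bus SDK."""
    messages: List[Message] = field(default_factory=list)

    def send(self, msg: Message) -> None:
        # The queue accepts the message now, even if it is still scheduled.
        self.messages.append(msg)

    def active(self, now: datetime) -> List[Message]:
        """Messages a receiver could see at `now` (scheduled time reached or not set)."""
        return [
            m for m in self.messages
            if m.scheduled_enqueue_time_utc is None or m.scheduled_enqueue_time_utc <= now
        ]


if __name__ == "__main__":
    start = datetime.now(timezone.utc)
    queue = ToyQueue()
    queue.send(Message("delayed", start + timedelta(minutes=10)))
    print([m.body for m in queue.active(start)])  # nothing active yet
```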

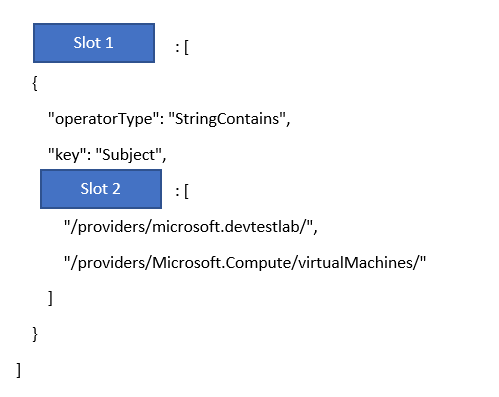

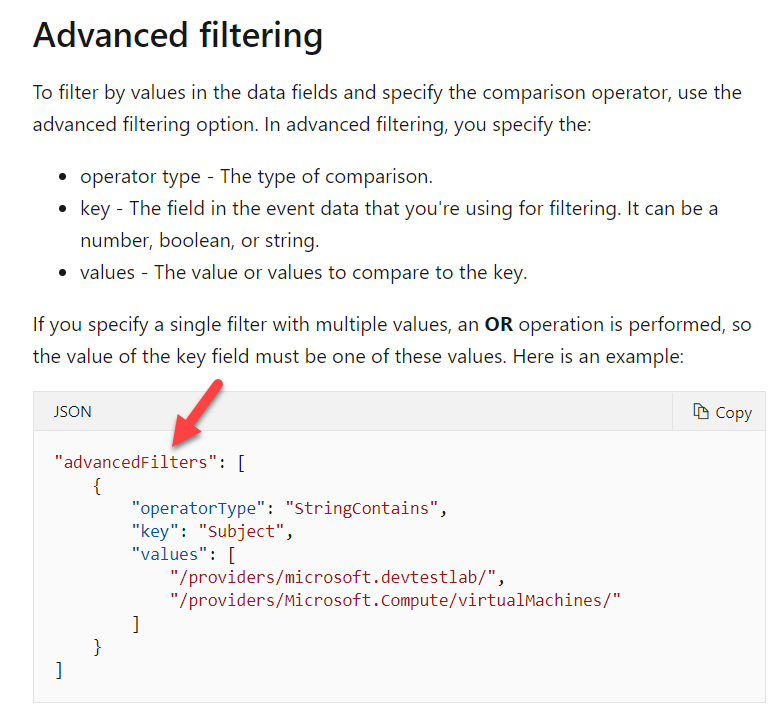

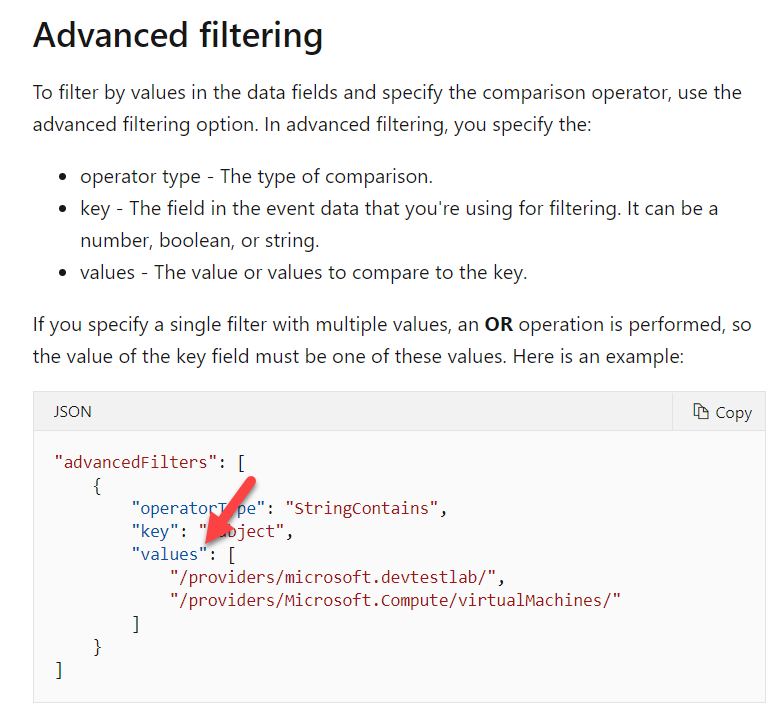

You have to develop an application that is going to listen to events emitted by the Event Grid service. You want to ensure that your application only receives events from the virtual machine service or the devtestlab service. You have to configure the filters accordingly.

Which of the following would go into Slot 1?

- A. “filter”

- B. “service”

- C. “advancedFilters”

- D. “values”

Explanation:

Answer – C

For this requirement, we have to set Advanced filters.

An example of this is given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on event grid filtering, please refer to the following URL

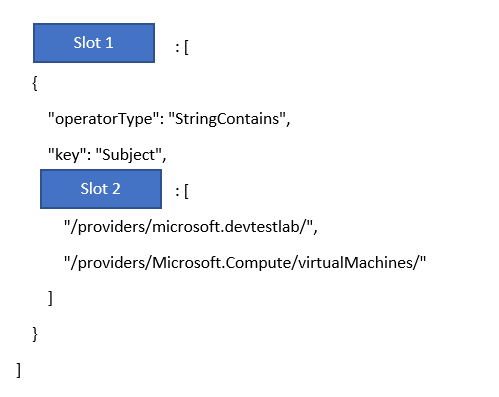

You have to develop an application that is going to listen to events emitted by the Event Grid service. You want to ensure that your application only receives events from the virtual machine service or the devtestlab service. You have to configure the filters accordingly.

Which of the following would go into Slot 2?

- A. “filter”

- B. “service”

- C. “advancedFilters”

- D. “values”

Explanation:

Answer – D

For this requirement, set an advanced filter and list the allowed values in its values array.

An example of this is given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on event grid filtering, please refer to the following URL
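The following Python sketch shows an advancedFilters section of this shape together with a small evaluator. The key (Subject) and the provider-path values are assumptions chosen for illustration, not necessarily the values in the question's template. The evaluator models the documented rules that values within one filter are ORed, separate filters are ANDed, and string comparisons are case-insensitive; it only implements the StringContains operator.

```python
from typing import Any, Dict

SUBSCRIPTION: Dict[str, Any] = {
    "filter": {
        "advancedFilters": [
            {
                "operatorType": "StringContains",
                "key": "Subject",  # assumption: filter on the event subject
                "values": [
                    "/providers/microsoft.devtestlab/",
                    "/providers/Microsoft.Compute/virtualMachines/",
                ],
            }
        ]
    }
}


def passes(event: Dict[str, Any], subscription: Dict[str, Any] = SUBSCRIPTION) -> bool:
    """Return True if the event would be delivered under the advanced filters."""
    for flt in subscription["filter"]["advancedFilters"]:
        if flt["operatorType"] != "StringContains":
            raise ValueError("this sketch only models StringContains")
        # Case-insensitive lookup of a top-level event field such as subject.
        actual = next((str(v) for k, v in event.items() if k.lower() == flt["key"].lower()), "")
        # Values inside one filter are ORed...
        if not any(value.lower() in actual.lower() for value in flt["values"]):
            return False  # ...and every filter in the array must pass (AND).
    return True
```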

You have to develop a workflow in the Azure Logic App service to perform the following actions

- Trigger the workflow whenever a deallocation activity occurs for a virtual machine

- Send an email to the IT Administrator with details of the activity

Which of the following services would you integrate with your workflow to trigger it?

- A. Azure Event Hubs

- B. Azure Event Grid

- C. Azure Service Bus

- D. Azure Functions

Explanation:

Answer – B

You would use the Azure Event Grid service to listen to events emitted from the resource group that contains the virtual machines.

This is also given in the Microsoft documentation as an example

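Event Grid is the service that publishes Azure resource events, such as a virtual machine deallocation, to subscribers like Logic Apps. Event Hubs and Service Bus are messaging services that would need a separate publisher, and Azure Functions is a compute service rather than an event source, so the other options are incorrect.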

For more information on the actual use case scenario example, please refer to the following URL

You have to develop an Azure Function that uses a language runtime that Azure Functions does not support natively. You decide to use Azure Functions custom handlers. You have to define the correct application settings in the local.settings.json file.

{

"IsEncrypted": false,

"Values": {

Area 1

}

}

Which of the following would go into Area 1?

- A. "FUNCTIONS_RUNTIME": "Custom"

- B. "WORKER_RUNTIME": "Custom"

- C. "FUNCTIONS_WORKER_RUNTIME": "Custom"

- D. "AZURE_FUNCTIONS_WORKER_RUNTIME": "Custom"

Explanation:

Correct Answer: C

- Option C is CORRECT because "FUNCTIONS_WORKER_RUNTIME": "Custom" must be defined in the local.settings.json file so that the Functions host starts the custom handler instead of a built-in language worker.

- Option A is incorrect because FUNCTIONS_RUNTIME is not a setting that the Functions host recognizes.

- Option B is incorrect because WORKER_RUNTIME is not a setting that the Functions host recognizes.

- Option D is incorrect because AZURE_FUNCTIONS_WORKER_RUNTIME is not a setting that the Functions host recognizes.
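A sketch of the two files a custom handler typically needs, generated from Python. Microsoft's custom handler samples use the lowercase value custom for FUNCTIONS_WORKER_RUNTIME; the executable name (handler) and the AzureWebJobsStorage value for local development are hypothetical placeholders. The customHandler section of host.json tells the host which executable to start.

```python
import json
from typing import Any, Dict, Tuple


def custom_handler_settings(executable: str = "handler") -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Return (local.settings.json, host.json) contents for a custom handler app."""
    local_settings = {
        "IsEncrypted": False,
        "Values": {
            # Tells the Functions host to run a custom handler instead of a built-in worker.
            "FUNCTIONS_WORKER_RUNTIME": "custom",
            # Placeholder: local storage emulator connection for development.
            "AzureWebJobsStorage": "UseDevelopmentStorage=true",
        },
    }
    host = {
        "version": "2.0",
        "customHandler": {
            # Hypothetical name of the web server executable the host should start.
            "description": {"defaultExecutablePath": executable},
            # Forward HTTP-triggered requests to the handler unchanged.
            "enableForwardingHttpRequest": True,
        },
    }
    return local_settings, host


if __name__ == "__main__":
    local_settings, host = custom_handler_settings()
    print(json.dumps(local_settings, indent=2))
    print(json.dumps(host, indent=2))
```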

The State University of Maryland connects all of its printers and PCs through printer management software installed on an Ubuntu 18.04 LTS VM hosted in Azure. During certain periods, the printer workflow slows down and print requests take longer than usual to process.

You have been asked to open a remote desktop session to the VM and quickly troubleshoot the performance of the management software.

Drag the correct options from the Actions area to the answer area to open a remote desktop session to the VM.

Correct Answer:

- Create an NSG rule to allow TCP on port 3389.
- Install and configure a desktop environment using xfce.
- Install a remote desktop server using xrdp.

Explanation:


- Option A is correct because a remote desktop session uses RDP, so inbound TCP port 3389 must be allowed by the network security group.

- Option B is incorrect because port 80 is the default port for HTTP. Opening it is not required for a remote desktop session.

- Option C is correct because Ubuntu 18.04 LTS VMs in Azure do not include a desktop environment by default. xfce is a lightweight desktop environment that works well over RDP.

- Option D is incorrect because resetting the SSH public key is not required to start a remote desktop session.

- Option E is correct because Ubuntu 18.04 LTS VMs in Azure do not include a remote desktop service by default. The open-source xrdp server can be installed to provide one.

Reference:

For more details on installing and configuring Remote Desktop to connect to a Linux VM in Azure, please check the link:

You are developing a set of Azure Functions. You have deployed a new Azure Function App that uses the .NET Core 3.1 language runtime. You have to configure the logging level for the Azure Functions. In which of the following files would you configure the logging details?

- A. function.json

- B. host.json

- C. app.json

- D. app.xml

Explanation:

Answer – B

You would configure logging in the host.json file.

This is also mentioned in the Microsoft documentation

function.json defines the triggers and bindings of a single function, and app.json and app.xml are not Azure Functions configuration files, so the other options are incorrect.

For more information on monitoring Azure Functions, please refer to the following URL
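A minimal host.json with logging configured, built as a Python dictionary so it can be written to disk. The category names follow the logging.logLevel convention in host.json; MyFunction is a hypothetical function name used to show a per-function override.

```python
import json


def host_json(function_name: str = "MyFunction") -> str:
    """Return host.json text with log levels set per category."""
    config = {
        "version": "2.0",
        "logging": {
            "logLevel": {
                "default": "Information",              # baseline for every category
                "Host.Results": "Error",               # request results logged by the host
                "Function": "Information",             # all functions
                f"Function.{function_name}": "Debug",  # one function, more verbose
            }
        },
    }
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(host_json())
```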

You have to develop a solution that uses the Azure Service Bus service. The solution will use queues in an Azure Service Bus namespace. There is a large number of senders and a small number of receivers. Which of the following are best practices for maximizing queue throughput in this scenario? Choose 2 answers from the options given below

- A. If the sender resides in a different process, use multiple factories per process

- B. If the sender resides in a different process, use a single factory per process

- C. Leave batched store access enabled

- D. Leave batched store access disabled

Explanation:

Answer – B and C

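The Service Bus performance guidance covers this scenario (a queue with a large number of senders). If each sender resides in a different process, use only a single factory per process, and leave batched store access enabled, because it increases the overall rate at which messages can be written to the queue.

Using multiple factories per process or disabling batched store access does not help throughput in this scenario, so options A and D are incorrect.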

For more information on best practices for Azure Service Bus, please refer to the following URL

You have to develop an ASP.Net web application. The web application would be deployed to an Azure Web App service. You must implement management of session information within the web application. The application should be able to save session state information and HTML output. Session state should be shared across all ASP.Net web applications. There should also be support for controlled and concurrent access to the same session state data for multiple readers and for a single writer.

You must decide on the right solution for storing the full HTTP responses.

You decide to enable Application Request Routing

Would this fulfil the requirement?

- A. Yes

- B. No

Explanation:

Answer – B

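Application Request Routing (ARR) affinity only routes a client's requests to the same instance; it does not store session state or HTTP output. The ideal solution here is Azure Cache for Redis, which can store session state data and output-cached responses.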

For more information on using the session state provider for Azure Cache for Redis, please refer to the following URL

You have to develop an ASP.Net web application. The web application would be deployed to an Azure Web App service. You must implement management of session information within the web application. The application should be able to save session state information and HTML output. Session state should be shared across all ASP.Net web applications. There should also be support for controlled and concurrent access to the same session state data for multiple readers and for a single writer.

You must decide on the right solution for storing the full HTTP responses.

You decide to use an instance of Azure Cache for Redis

Would this fulfil the requirement?

- A. Yes

- B. No

Explanation:

Answer – A

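Yes. Azure Cache for Redis provides an ASP.NET session state provider and an ASP.NET output cache provider, so it can store both session state and full HTTP responses and share them across all instances of the web application.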

For more information on using the session state provider for Azure Cache for Redis, please refer to the following URL

You have to develop an ASP.Net web application. The web application would be deployed to an Azure Web App service. You must implement management of session information within the web application. The application should be able to save session state information and HTML output. Session state should be shared across all ASP.Net web applications. There should also be support for controlled and concurrent access to the same session state data for multiple readers and for a single writer.

You must decide on the right solution for storing the full HTTP responses.

You decide to use an instance of Azure Event Hubs

Would this fulfil the requirement?

- A. Yes

- B. No

Explanation:

Answer – B

Event Hubs is a data ingestion service, not a caching service.

The ideal solution here is Azure Cache for Redis, which can store session state data and output-cached responses.

For more information on using the session state provider for Azure Cache for Redis, please refer to the following URL

You have to develop an ASP.Net application. The application is an on-demand video streaming service. The application would be hosted on an Azure Web App service. To facilitate the delivery of content, you decide to use the Azure Content Delivery Network service.

An example of a URL which users would use for viewing a video is given below

All of the video content must expire from the cache after an hour. The videos of varying quality must be delivered from the closest regional point of presence.

You have to implement the right caching rules.

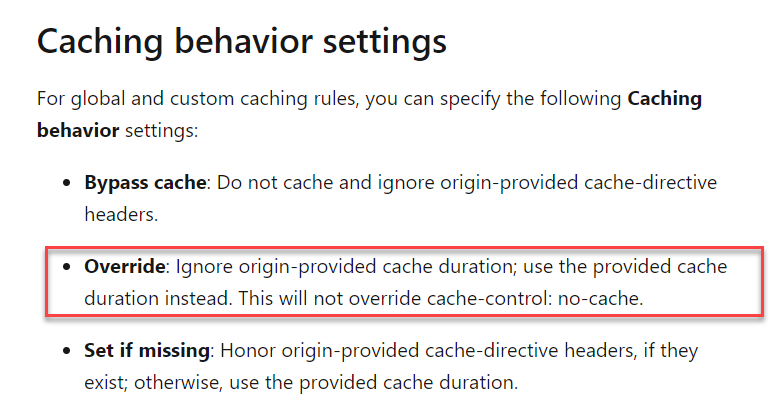

Which of the following would you choose for the caching behaviour?

- A. Bypass cache

- B. Default

- C. Override

- D. Set if missing

Explanation:

Answer – C

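Because all video content must expire after one hour, regardless of any cache headers sent by the origin, use the Override caching behaviour. Override applies the cache duration defined in the rule and ignores the origin's cache directives.

Bypass cache disables caching, and Set if missing applies the rule's duration only when the origin does not send its own cache directives, so the other settings are incorrect.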

For more information on Azure CDN cache settings, please refer to the following URL
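A small Python model of how the caching behaviours combine a rule's duration with the origin's Cache-Control max-age. It simplifies the real CDN logic (for example, it ignores no-cache and private directives) and is meant only to show why Override is the behaviour that guarantees a one-hour expiry.

```python
from typing import Optional

ONE_HOUR = 60 * 60  # the requirement, in seconds


def effective_ttl(behavior: str, rule_seconds: int, origin_max_age: Optional[int]) -> Optional[int]:
    """Seconds the CDN keeps a response, or None if it is not cached (simplified model)."""
    if behavior == "Bypass cache":
        return None  # never cached, whatever the origin says
    if behavior == "Override":
        return rule_seconds  # rule duration wins; origin's max-age is ignored
    if behavior == "Set if missing":
        # Origin's max-age wins when present; the rule only fills the gap.
        return origin_max_age if origin_max_age is not None else rule_seconds
    raise ValueError(f"unknown caching behavior: {behavior}")


if __name__ == "__main__":
    # Origin says one day, but the requirement is one hour.
    for behavior in ("Bypass cache", "Override", "Set if missing"):
        print(behavior, effective_ttl(behavior, ONE_HOUR, 86400))
```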

You have to develop an ASP.Net application. The application is an on-demand video streaming service. The application would be hosted on an Azure Web App service. To facilitate the delivery of content, you decide to use the Azure Content Delivery Network service.

An example of a URL which users would use for viewing a video is given below

All of the video content must expire from the cache after an hour. The videos of varying quality must be delivered from the closest regional point of presence.

You have to implement the right caching rules.

Which of the following would you choose as the cache expiration duration?

- A. 1 second

- B. 1 minute

- C. 1 hour

- D. 1 day

Explanation:

Answer – C

Since the requirement mentions that the cache content needs to expire after an hour, we have to choose the setting of 1 hour.

Since the time frame is clearly mentioned in the requirement, all other options are incorrect.

For more information on Azure CDN cache settings, please refer to the following URL

The ‘Food For Us’ Grocery Outlet is launching an online grocery platform where customers can log in and order groceries.

To make sign-up easy and convenient, the team has decided to let users sign in with their preferred social account (Facebook, Google/Gmail or Microsoft) or with a local email account, and get single sign-on access to the website.

Which identity management service should be used to achieve this objective?

- A. Azure Active Directory B2B

- B. Azure Multi-Factor Authentication

- C. Azure Active Directory B2C

- D. Single Tenant Azure AD Authentication

Explanation:

Answer: C

Option A is incorrect because Azure Active Directory B2B enables users from external organizations to collaborate with your organization. It is not designed for customers signing in with social identity providers.

Option B is incorrect because enabling MFA on its own does not let users authenticate with social identity providers.

Option C is CORRECT because Azure Active Directory B2C lets customers authenticate with social identity providers, with local accounts and with other organizations' identity providers, which meets the objective of this use case.

Option D is incorrect because single-tenant Azure AD authentication only signs in users from your own directory; it does not let customers sign in with social identity providers.

Reference:

More details on Azure Active Directory B2C and its use cases are available in the following link:

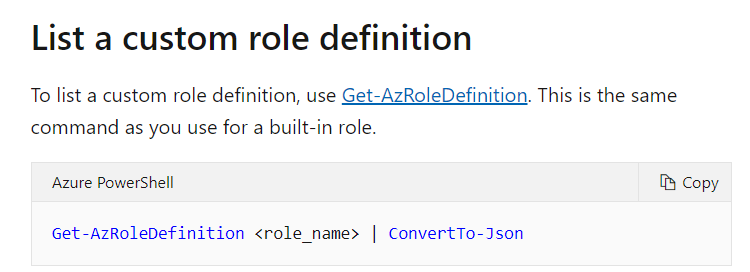

Your company has a set of resources in Azure. Your team develops web applications and then hands off the build to the deployment team, which deploys it to Azure. You have to ensure that your team can view resources in Azure and can create support tickets for all subscriptions.

You must create a new custom role based on an existing role definition.

Which PowerShell command would you use to generate a JSON file for creating the custom role?

- A. Get-AzRoleDefinition “Reader” | ConvertTo-Json

- B. Set-AzRoleDefinition “Reader” | ConvertTo-Json

- C. Get-AzRoleDefinition “Owner” | ConvertTo-Json

- D. Get-AzRoleDefinition “Reader” | ConvertTo-XML

Explanation:

Answer – A

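Use Get-AzRoleDefinition to retrieve the built-in Reader role, which already grants read access to all resources, and pipe the output to ConvertTo-Json to produce a JSON file that you can edit into the custom role.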

An example of this is also given in the Microsoft documentation

Option B is incorrect because Set-AzRoleDefinition updates an existing custom role; it does not retrieve a role definition.

Option C is incorrect since the Owner role would have excess permissions that are not required for this scenario

Option D is incorrect since we have to use the ConvertTo-Json option

For more information on creating custom roles, please refer to the following URL

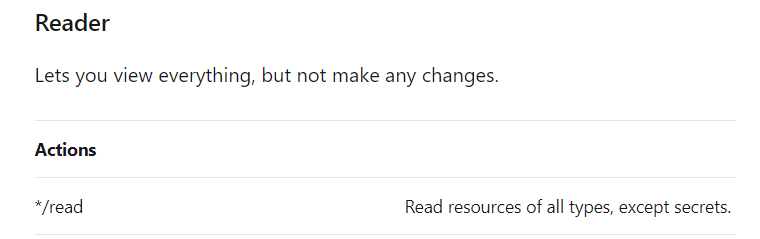

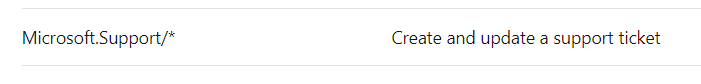

Your company has a set of resources in Azure. Your team develops web applications and then hands off the build to the deployment team, which deploys it to Azure. You have to ensure that your team can view resources in Azure and can create support tickets for all subscriptions.

You must create a new custom role based on an existing role definition.

Which of the following would you add to the Actions section for the custom role?

- A. “*/read”,”Microsoft.Support/*”

- B. “*/read”

- C. “*”

- D. “*”,”Microsoft.Support/*”

Explanation:

Answer – A

The built-in Reader role uses the action “*/read”, which grants read access to all resource types.

Roles that can create support tickets include the “Microsoft.Support/*” action, as shown below.

Options C and D use “*”, which grants every control-plane action rather than read access only, and option B grants read access but no permission to create support tickets, so the other options are incorrect.

For more information on the built-in roles in Azure, please refer to the following URL
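The JSON exported with Get-AzRoleDefinition "Reader" | ConvertTo-Json then needs these edits. A sketch of them in Python, assuming the exported definition has the usual Name, Id, IsCustom, Actions, NotActions and AssignableScopes fields; the role name and subscription ID are placeholders. The resulting file would then be passed to New-AzRoleDefinition -InputFile.

```python
import json
from typing import Any, Dict


def custom_role_from_reader(reader: Dict[str, Any], subscription_id: str) -> Dict[str, Any]:
    """Turn an exported Reader role definition into a custom role definition."""
    role = dict(reader)  # shallow copy; the fields below are replaced, not mutated
    role.pop("Id", None)  # a new custom role gets its own ID
    role["IsCustom"] = True
    role["Name"] = "Reader Support Tickets"  # hypothetical role name
    role["Description"] = "View all resources and create support tickets."
    role["Actions"] = ["*/read", "Microsoft.Support/*"]
    role["NotActions"] = []
    role["AssignableScopes"] = [f"/subscriptions/{subscription_id}"]
    return role


if __name__ == "__main__":
    exported = {"Name": "Reader", "IsCustom": False, "Actions": ["*/read"],
                "NotActions": [], "AssignableScopes": ["/"]}
    print(json.dumps(custom_role_from_reader(exported, "your-subscription-id"), indent=2))
```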

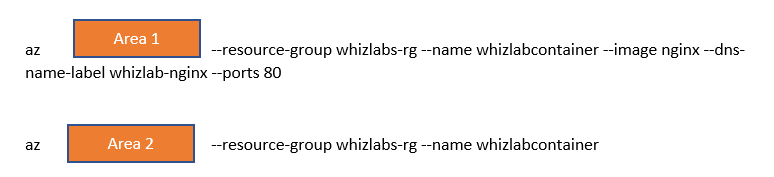

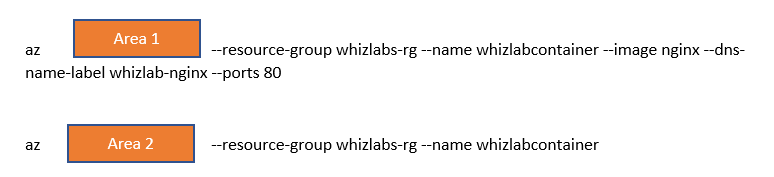

You have to create a new Azure container instance to host an nginx container.

You also have to view the container instance’s logs.

You have to complete the below Azure CLI commands for this requirement

Which of the following would go into Area 1?

- A. instance create

- B. container create

- C. instance logs

- D. container logs

Explanation:

Answer - B

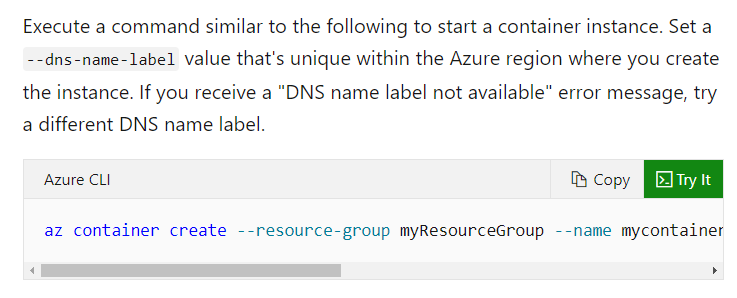

To create a new container instance, use the az container create command.

This is also given in the Microsoft documentation.

az container is the command group for Azure Container Instances, there is no instance command group, and logs only retrieves output from an existing container, so the other options are incorrect.

For more information on Azure container instances, please refer to the following URL

You have to create a new Azure container instance to host an nginx container.

You also have to view the container instance’s logs.

You have to complete the below Azure CLI commands for this requirement

Which of the following would go into Area 2?

- A. instance create

- B. container create

- C. instance logs

- D. container logs

Explanation:

Answer - D

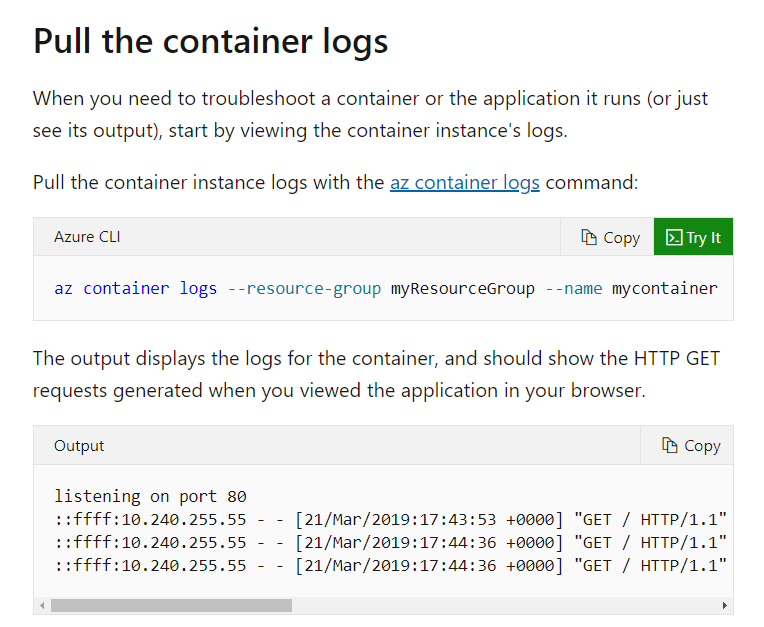

To pull the container logs, use the az container logs command.

This is also given in the Microsoft documentation.

az container is the command group for Azure Container Instances, there is no instance command group, and create starts a new container rather than returning logs, so the other options are incorrect.

For more information on Azure container instances, please refer to the following URL
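Both commands, built as argument lists in Python. The resource group and container names are hypothetical, and the nginx image, OS type, port and public IP flags are assumptions about how you might expose the container. Run the lists with subprocess.run on a machine where the Azure CLI is installed and signed in.

```python
import shlex
from typing import List, Tuple


def aci_commands(resource_group: str, name: str) -> Tuple[List[str], List[str]]:
    """Return (create, logs) argument lists for an nginx container instance."""
    create = [
        "az", "container", "create",
        "--resource-group", resource_group,
        "--name", name,
        "--image", "nginx",  # assumption: the public nginx image from Docker Hub
        "--os-type", "Linux",
        "--ports", "80",
        "--ip-address", "Public",
    ]
    # Fetch the logs of the container started above.
    logs = ["az", "container", "logs", "--resource-group", resource_group, "--name", name]
    return create, logs


if __name__ == "__main__":
    for cmd in aci_commands("myResourceGroup", "mynginx"):
        print(shlex.join(cmd))
```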

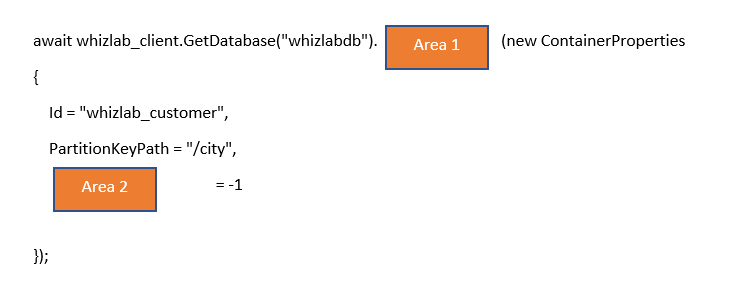

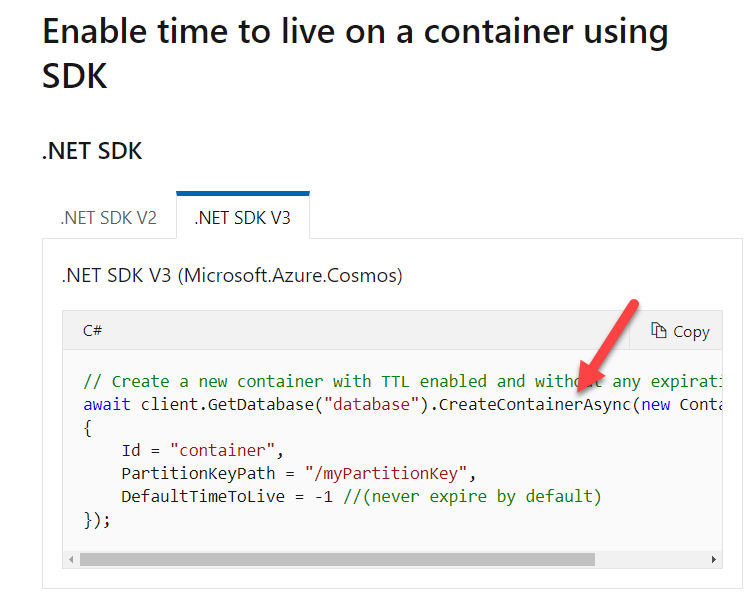

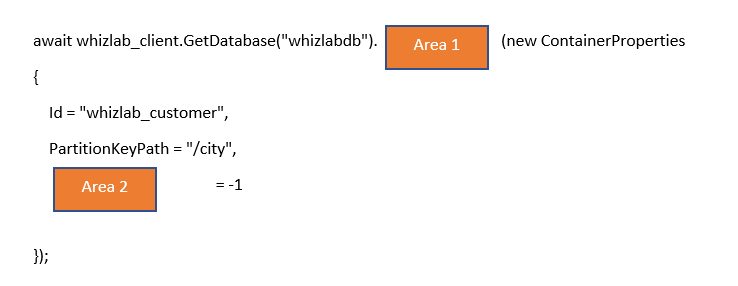

You have to develop an application that works with Azure Cosmos DB. You have created an Azure Cosmos DB account that uses the SQL API.

You have to create a container using the .NET SDK.

You have to ensure that TTL is enabled for all items in the container, but that items do not expire by default.

Which of the following would go into Area 1?

- A. GetContainerAsync

- B. SetContainerAsync

- C. NewContainerAsync

- D. CreateContainerAsync

Explanation:

Answer – D

Use the CreateContainerAsync method of the Database class to create the container.

An example of this is also given in the Microsoft documentation

GetContainerAsync, SetContainerAsync and NewContainerAsync are not methods of the Database class in the .NET SDK v3, so the other options are incorrect.

For more information on working with the time to live property for the items in Azure Cosmos DB, please refer to the following URL

You have to develop an application that works with Azure Cosmos DB. You have created an Azure Cosmos DB account that uses the SQL API.

You have to create a container using the .NET SDK.

You have to ensure that TTL is enabled for all items in the container, but that items do not expire by default.

Which of the following would go into Area 2?

- A. DefaultTimeToLive

- B. Value

- C. TTL

- D. Key

Explanation:

Answer – A

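Set the DefaultTimeToLive property of ContainerProperties to -1. This enables TTL on the container without a default expiration, so items do not expire unless they set their own ttl value.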

An example of this is also given in the Microsoft documentation

ContainerProperties has no Value, TTL or Key property that controls the container's time to live, so the other options are incorrect.

For more information on working with the time to live property for the items in Azure Cosmos DB, please refer to the following URL
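In the .NET SDK this typically amounts to passing new ContainerProperties(id, partitionKeyPath) { DefaultTimeToLive = -1 } to CreateContainerAsync. The Python function below is not SDK code; it models the documented interaction between the container's default TTL and an item's own ttl value so that the meaning of -1 is explicit.

```python
from typing import Optional


def seconds_until_expiry(default_ttl: Optional[int], item_ttl: Optional[int] = None) -> Optional[int]:
    """Return seconds until an item expires, or None if it never expires.

    default_ttl mirrors ContainerProperties.DefaultTimeToLive:
      None -> TTL is off for the container; an item's ttl is ignored
      -1   -> TTL is on, but items never expire unless they set their own ttl
      n    -> items expire n seconds after their last write by default
    item_ttl mirrors an item's ttl property; -1 means "never expire".
    """
    if default_ttl is None:
        return None
    effective = item_ttl if item_ttl is not None else default_ttl
    return None if effective == -1 else effective


if __name__ == "__main__":
    print(seconds_until_expiry(-1))      # TTL on, no default expiry
    print(seconds_until_expiry(-1, 30))  # item opts in to a 30-second expiry
```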

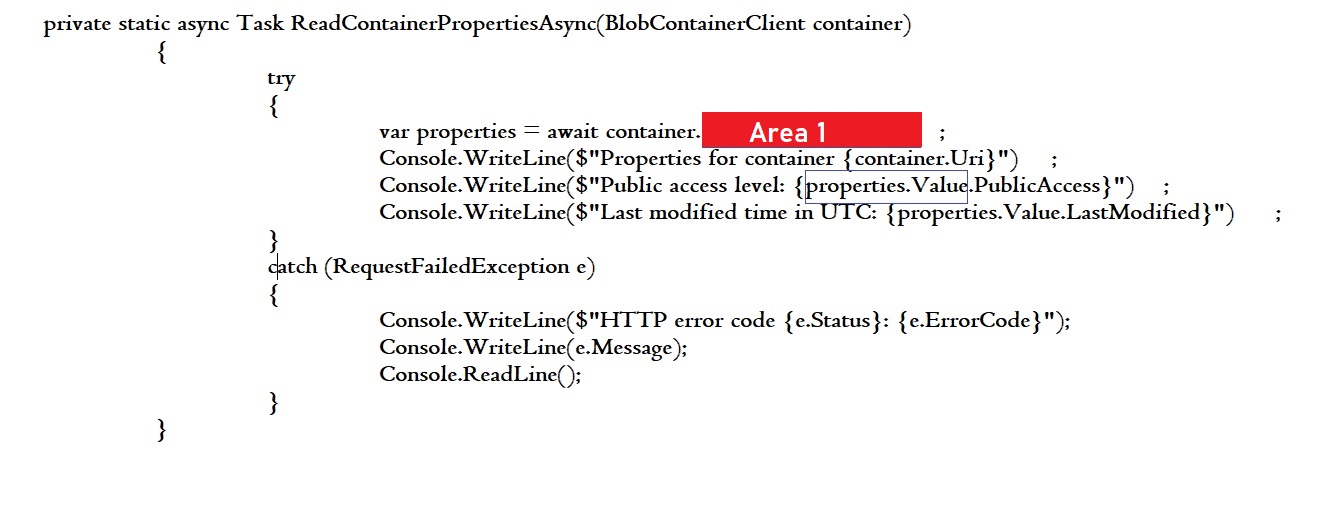

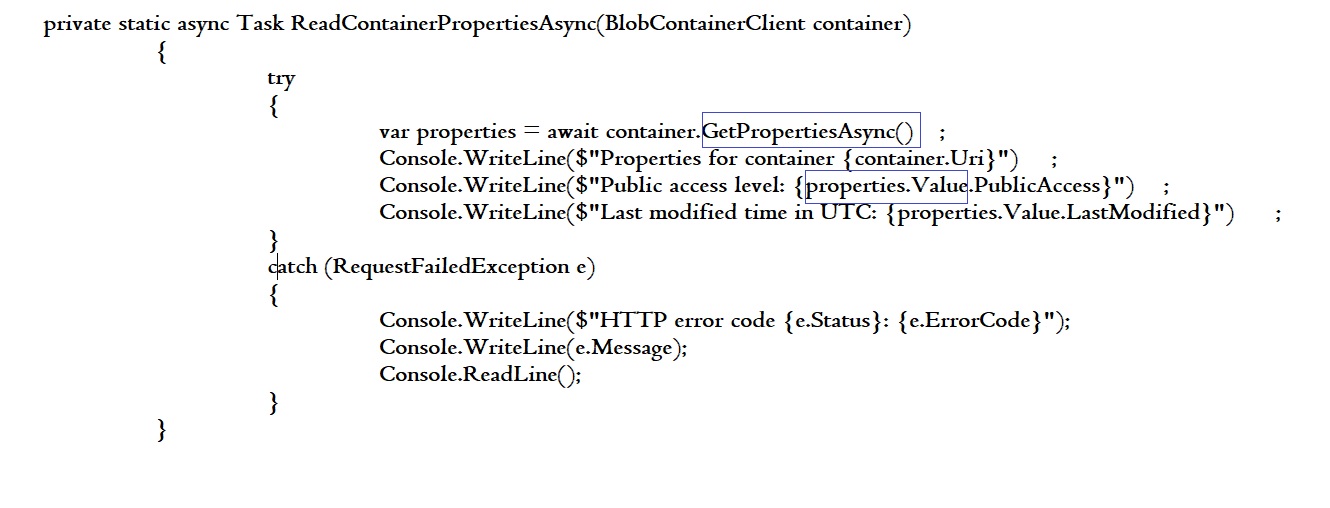

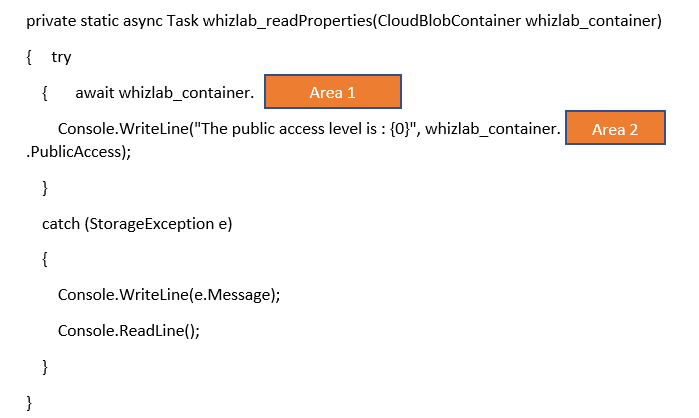

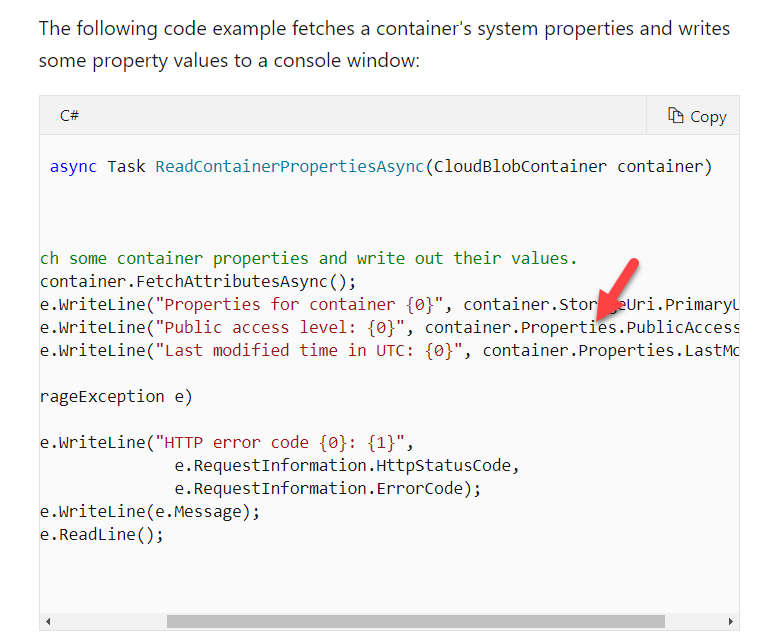

You have to develop an application that is going to work with Azure Blob storage.

Which of the following would go into Area 1?

- A. GetProperties();

- B. GetPropertiesAsync()

- C. GetMetadata

- D. GetData();

Explanation:

Answer – B

To get the properties of the container, we first have to call the GetPropertiesAsync() method.

An example of this is also given in the Microsoft documentation

Since this is clearly mentioned in the Microsoft documentation, all other options are incorrect

For more information on working with blob properties and metadata, please refer to the following URL

You have to develop an application that is going to work with Azure Blob storage.

Which of the following would go into Area 2?

- A. GetProperties

- B. Properties

- C. Fetch

- D. Metadata

Explanation:

Answer – B

Here we can use the Properties property to work with the container level properties

An example of this is also given in the Microsoft documentation

Since this is clearly mentioned in the Microsoft documentation, all other options are incorrect

For more information on working with blob properties and metadata, please refer to the following URL

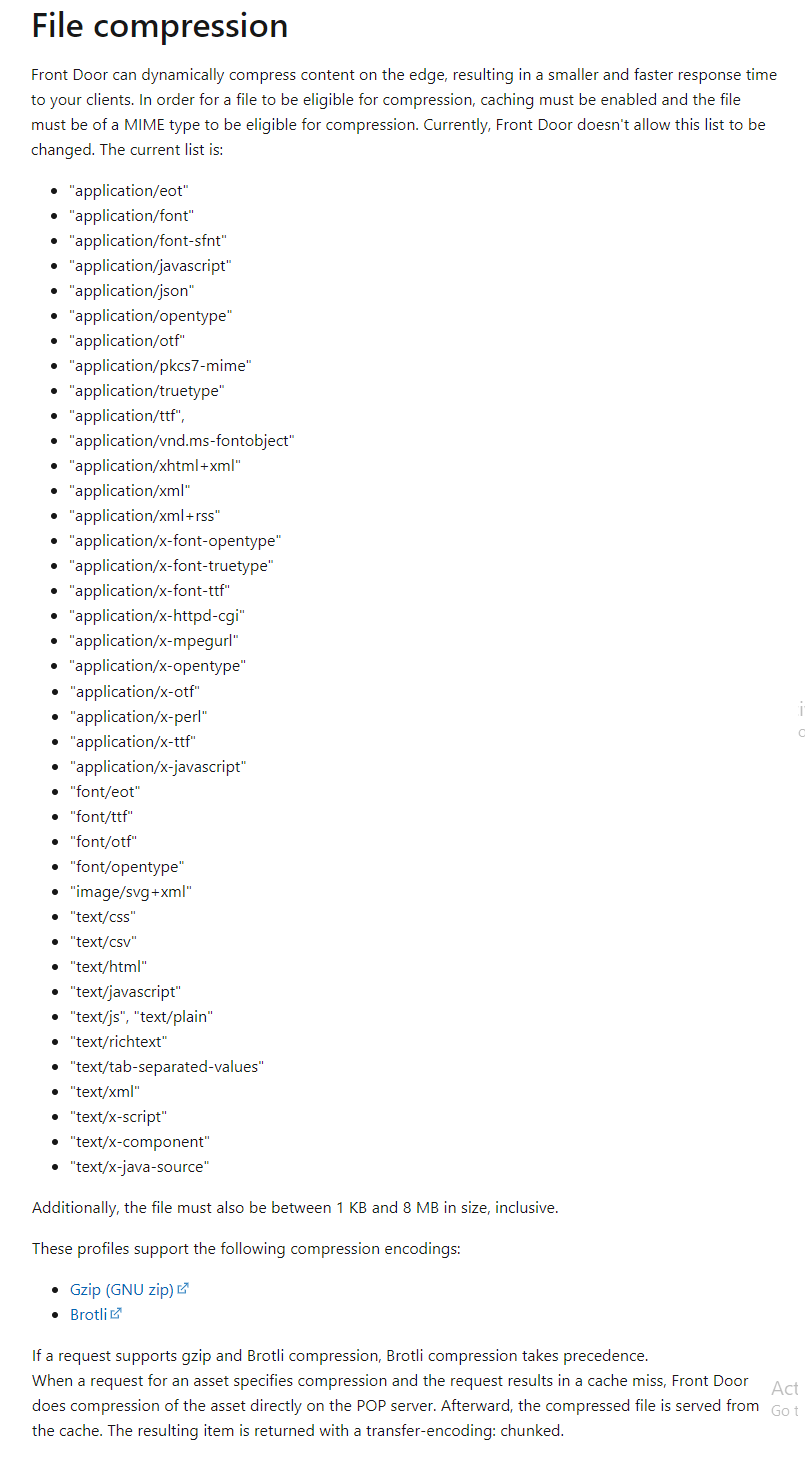

You have configured an Azure Front Door service that routes requests to web applications hosted in Azure App Service. A request is made for a 9 MB XML file with Brotli compression, but the file is not compressed.

Does Azure Front Door support compression for this MIME type?

- A. Yes

- B. No

Explanation:

Answer – A

According to the Microsoft documentation on file compression for the Azure Front Door service, XML MIME types such as application/xml and text/xml are supported.

For more information on Azure Front Door caching, please refer to the following URL

You have configured an Azure Front Door service that routes requests to web applications hosted in Azure App Service. A request is made for a 9 MB XML file with Brotli compression, but the file is not compressed.

Does Azure Front Door support this compression type?

- A. Yes

- B. No

Explanation:

Answer – A

According to the Microsoft documentation on file compression for the Azure Front Door service, Brotli is a supported compression encoding, along with gzip.

For more information on Azure Front Door caching, please refer to the following URL
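Both answers are Yes, which leaves open why the file is still not compressed. According to the Front Door compression documentation, a file is compressed only if, among other conditions, its MIME type is on the supported list, the client requests gzip or Brotli, and the file is between 1 KB and 8 MB in size. A 9 MB file falls outside that range. The Python sketch below encodes those three checks; the MIME set is an abbreviated, assumed subset of the documented list, and the size limits should be verified for your Front Door tier.

```python
# Assumed, abbreviated subset of the MIME types Front Door compresses
SUPPORTED_MIME_TYPES = {
    "application/xml", "text/xml", "application/json",
    "text/html", "text/css", "application/javascript",
}
SUPPORTED_ENCODINGS = {"gzip", "br"}  # gzip and Brotli
MIN_SIZE = 1024                        # 1 KB
MAX_SIZE = 8 * 1024 * 1024             # 8 MB


def compression_blockers(mime_type: str, encoding: str, size_bytes: int) -> list:
    """Return the reasons a response would NOT be compressed (empty list = eligible)."""
    reasons = []
    if mime_type not in SUPPORTED_MIME_TYPES:
        reasons.append("unsupported MIME type")
    if encoding not in SUPPORTED_ENCODINGS:
        reasons.append("unsupported encoding")
    if not MIN_SIZE <= size_bytes <= MAX_SIZE:
        reasons.append("size outside 1 KB to 8 MB")
    return reasons


# The scenario from the question: XML, Brotli, 9 MB
print(compression_blockers("application/xml", "br", 9 * 1024 * 1024))
# ['size outside 1 KB to 8 MB']
```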

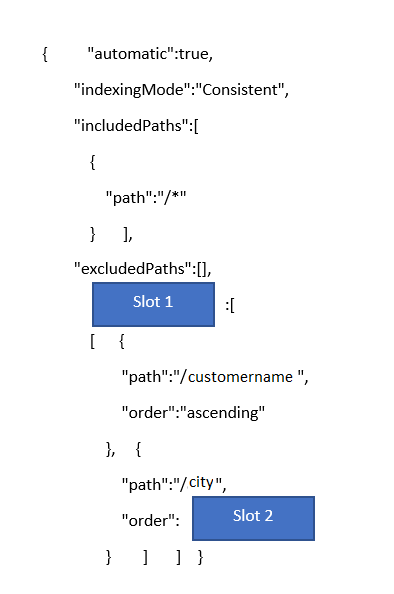

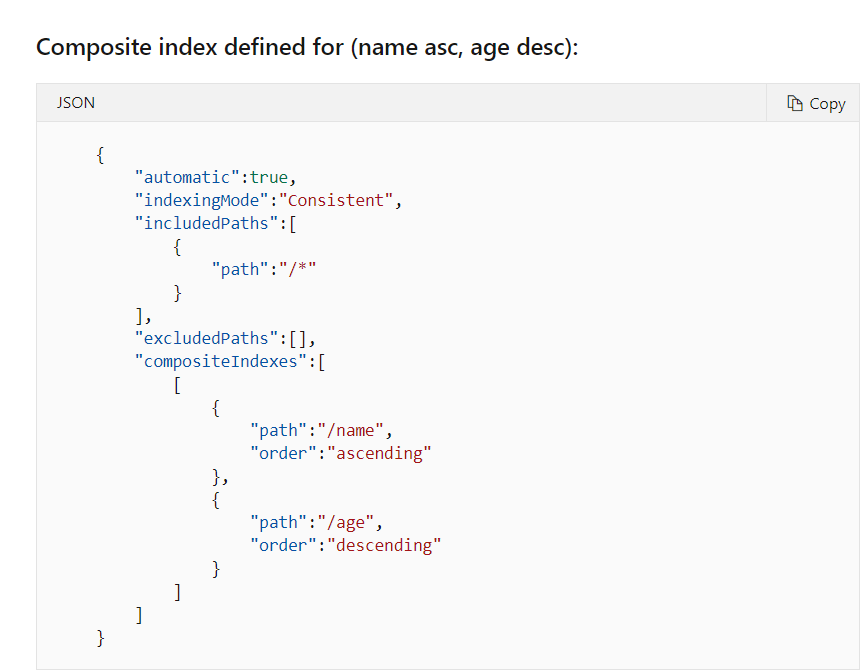

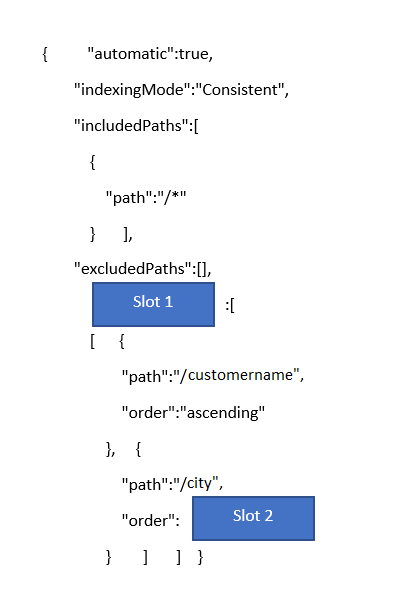

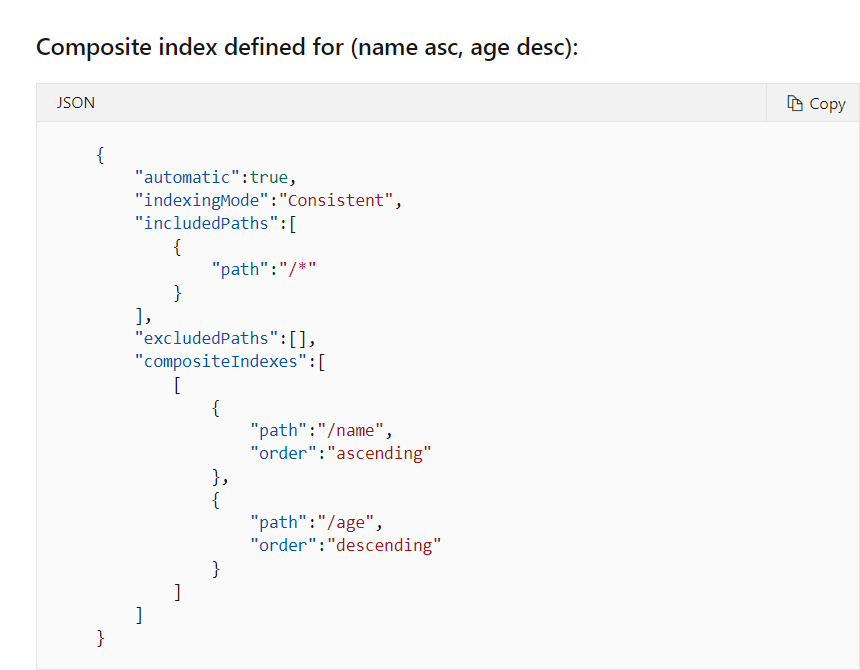

You have an Azure Cosmos DB account of the type SQL API. A sample item is given below:

{

"customername" : "John",

"city" : "New York"

}

You have to ensure that the following query can be executed:

select * from customers order by customername, city asc

You have to define an indexing policy for this.

Which of the following would go into Slot 1?

- A. orderBy

- B. compositeIndexes

- C. SortOrder

- D. descending

- E. ascending

Explanation:

Answer – B

If you need to order by multiple properties, you have to define a composite index.

A similar example of this is also given in the Microsoft documentation

Since this is clearly mentioned in the Microsoft documentation, all other options are incorrect

For more information on indexes in Azure Cosmos DB, please refer to the following URL

You have an Azure Cosmos DB account of the type SQL API. A sample item is given below:

{

"customername" : "John",

"city" : "New York"

}

You have to ensure that the following query can be executed:

select * from customers order by customername, city asc

You have to define an indexing policy for this.

Which of the following would go into Slot 2?

- A. orderBy

- B. compositeIndexes

- C. SortOrder

- D. descending

- E. ascending

Explanation:

Answer – E

Here since we need to order by both properties in ascending order, we need to use the ascending keyword

A similar example of this is also given in the Microsoft documentation

Since this is clearly mentioned in the Microsoft documentation, all other options are incorrect

For more information on indexes in Azure Cosmos DB, please refer to the following URL
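For reference, the policy completed by the two slots would contain a composite index like the one built below. This Python sketch produces the indexing-policy JSON as a dictionary (the `includedPaths` entry is an assumed default). It also adds a hypothetical helper, `supports_order_by`, that checks the documented matching rule: a composite index serves an ORDER BY only if the paths appear in the same order with the same directions, or with every direction reversed.

```python
import json


def composite_index_policy(paths, order="ascending"):
    """Build an indexing policy with one composite index over the given property paths."""
    return {
        "indexingMode": "consistent",
        "includedPaths": [{"path": "/*"}],
        # Slot 1 keyword: a list of composite indexes, each a list of (path, order) entries
        "compositeIndexes": [[{"path": p, "order": order} for p in paths]],
    }


def supports_order_by(composite, order_by):
    """True if the composite index matches the ORDER BY exactly or with all directions flipped."""
    flip = {"ascending": "descending", "descending": "ascending"}
    same = [(entry["path"], entry["order"]) for entry in composite]
    reversed_dirs = [(path, flip[direction]) for path, direction in same]
    return order_by in (same, reversed_dirs)


policy = composite_index_policy(["/customername", "/city"])
print(json.dumps(policy, indent=2))
```

Because the query sorts both properties ascending, a single composite index with `"ascending"` on both paths is enough; the same index would also serve the query with both properties sorted descending.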

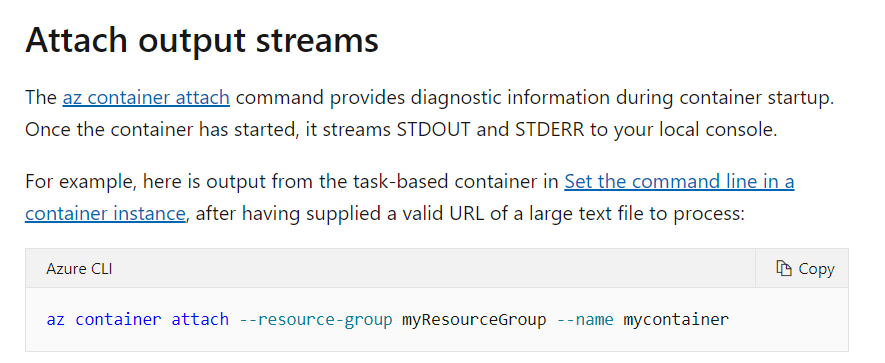

You have to view diagnostic information about the container during the startup process. Which of the following commands would you execute to get the relevant information?

- A. az web config

- B. az container attach

- C. az logs

- D. az docker logs

Explanation:

Answer – B

We can use the az container attach command for this purpose.

This is also given in the Microsoft documentation

Since this is clearly mentioned in the Microsoft documentation, all other options are incorrect

For more information on getting container instance logs, please refer to the following URL

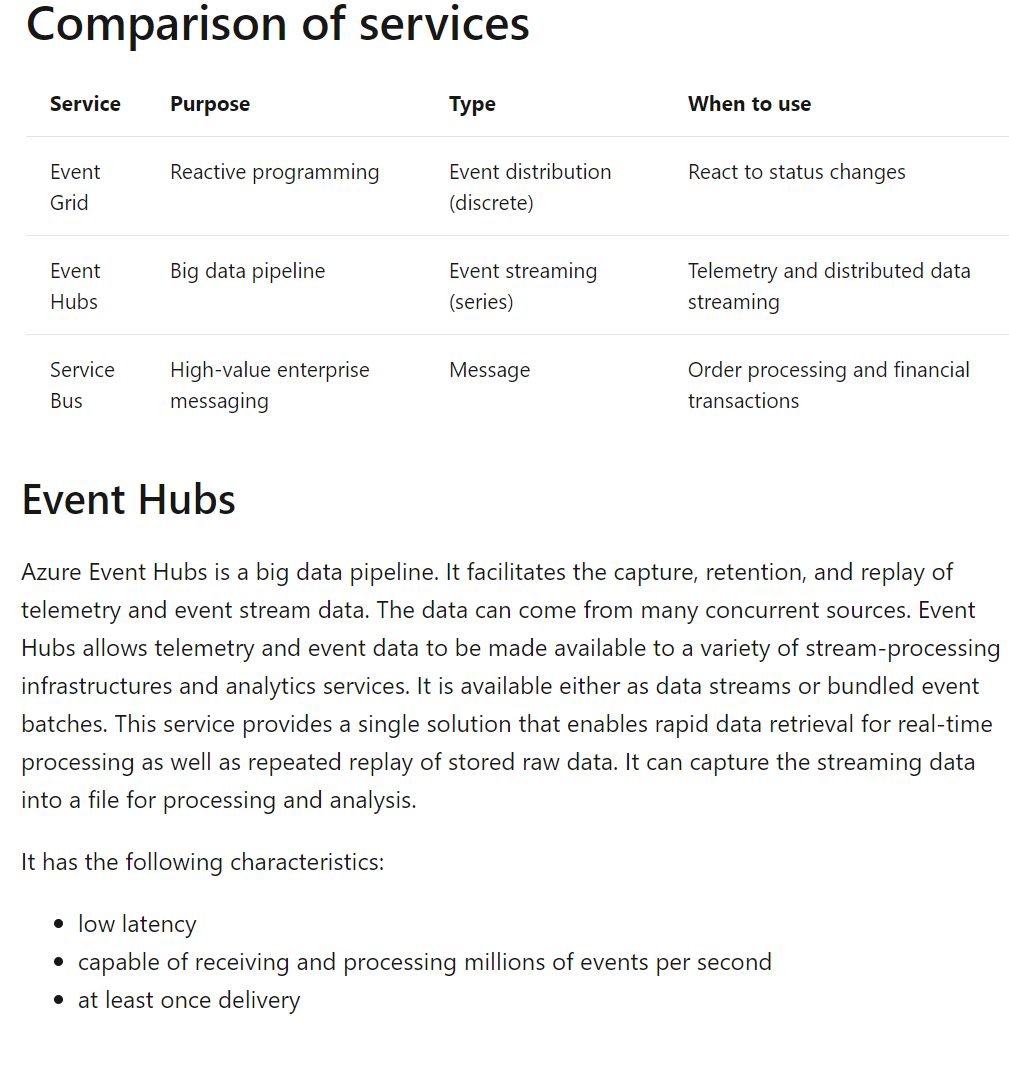

Which of the following would you use to store the end-user agreements?

- A. Azure Event Hubs

- B. Azure Event Grid

- C. Azure Service Queue

- D. Azure Table storage

Explanation:

Answer – A

What is the user agreement?

When a user submits content, they must agree to a user agreement. The agreement allows employees of the company to review content, store cookies on user devices, and track users' IP addresses.

Based on the number of agreements generated per hour, it's best to first ingest the data into Azure Event Hubs. Once the agreements are ingested into Azure Event Hubs, store the user's agreements data into Azure Blob storage.

URL: https://docs.microsoft.com/en-us/azure/event-hubs/event-hubs-faq#general

The other services mentioned as options are not ideal to use when you have so many agreements to ingest.

Option D: Azure Table storage is a non-relational NoSQL key-value store and is not suited to storing user documents. Hence this is not the correct answer

For more information on Azure Event Hubs, please refer to the following URL

https://docs.microsoft.com/en-us/azure/event-grid/compare-messaging-services

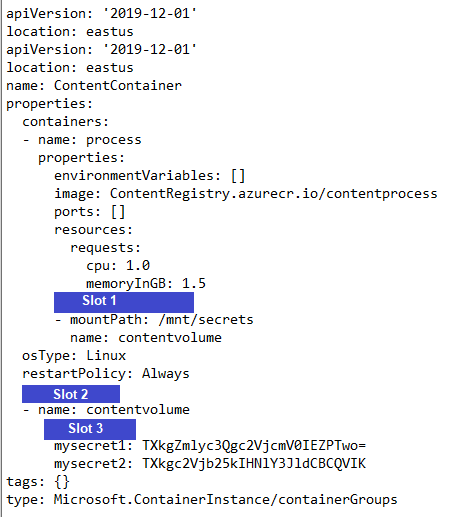

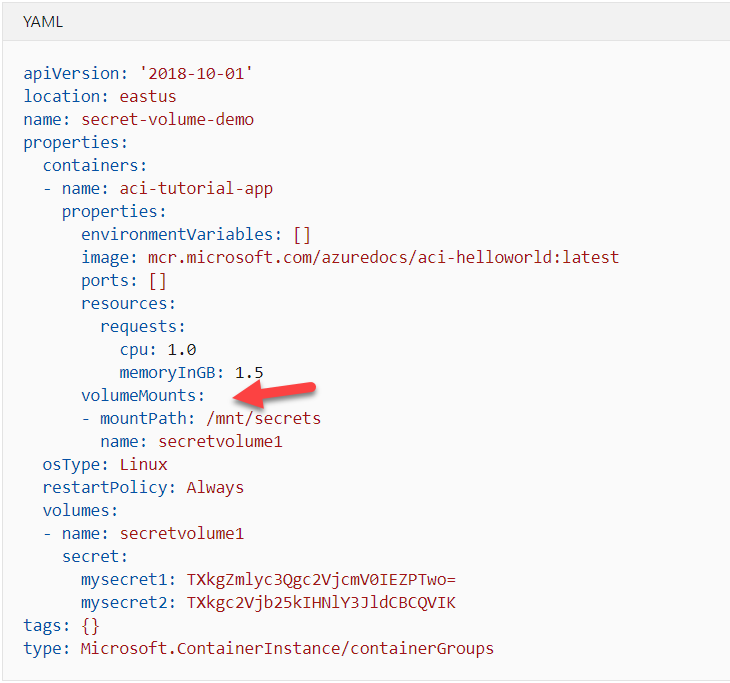

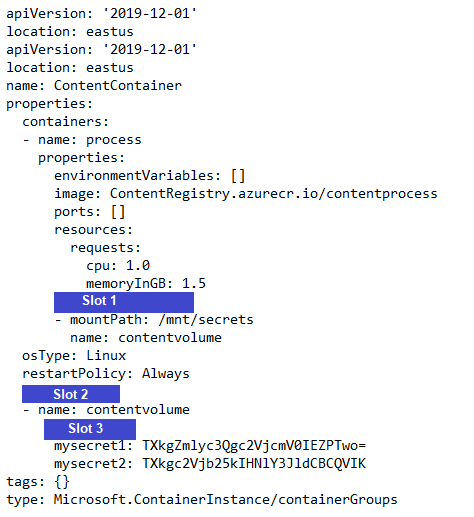

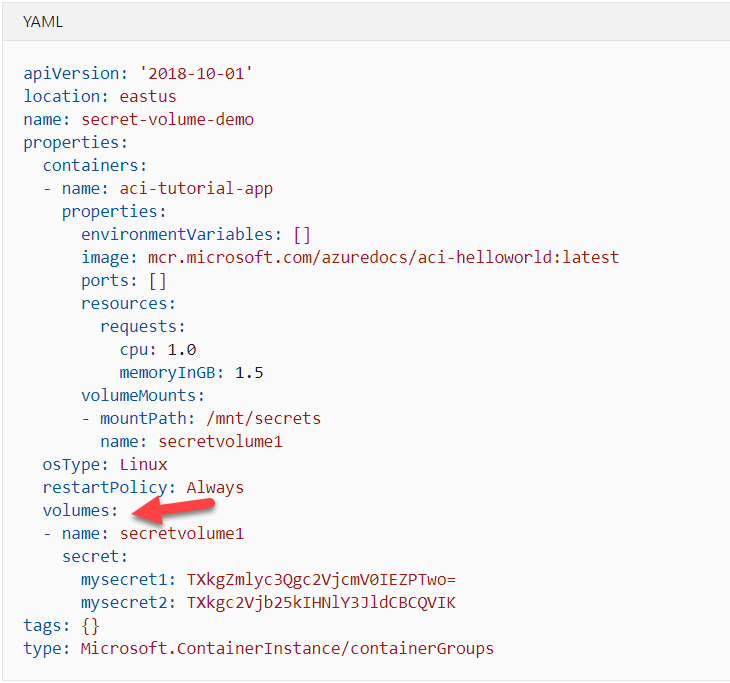

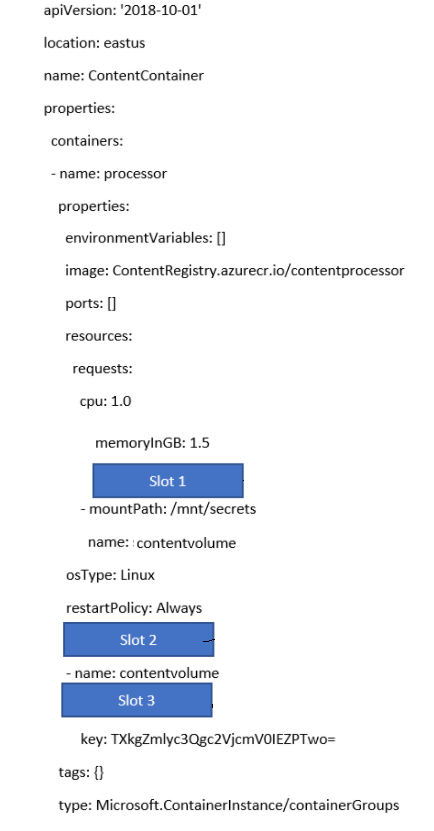

You have to comply with a security requirement that the storage keys must be available to the container only in memory. You have to complete the YAML template below for this requirement.

Which of the following would go into Slot 1?

- A. keyvault

- B. volumeMounts

- C. volumes

- D. secret

- E. value

Explanation:

Answer – B

Here we have to specify the volumeMounts property.

An example is also given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on accessing secrets from within containers, please refer to the following URL

You have to comply with a security requirement that the storage keys must be available to the container only in memory. You have to complete the YAML template below for this requirement.

Which of the following would go into Slot 2?

- A. keyvault

- B. volumeMounts

- C. volumes

- D. secret

- E. value

Explanation:

Answer – C

Here we have to specify the volumes property

An example is also given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on accessing secrets from within containers, please refer to the following URL

You have to comply with a security requirement that the storage keys must be available to the container only in memory. You have to complete the YAML template below for this requirement.

Which of the following would go into Slot 3?

- A. keyvault

- B. volumeMounts

- C. volumes

- D. secret

- E. value

Explanation:

Answer – D

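Here we have to specify the secret property, which defines a secret volume. Azure Container Instances backs secret volumes with tmpfs, a RAM-backed file system, so their contents are never written to non-volatile storage.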

An example is also given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on accessing secrets from within containers, please refer to the following URL
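As a rough illustration of how the three answers fit together, the Python sketch below builds the container-group definition as a dictionary with the same shape as the ACI YAML file. The container name, image, mount path, and secret name (`storagekey`) are hypothetical. Secret values in a YAML deployment file must be base64-encoded, which the sketch does with the standard library.

```python
import base64


def secret_volume_group(storage_key: str, mount_path: str = "/mnt/secrets") -> dict:
    """Return an ACI container-group definition that exposes storage_key via a secret volume."""
    encoded = base64.b64encode(storage_key.encode("utf-8")).decode("ascii")
    return {
        "properties": {
            "containers": [{
                "name": "app",  # hypothetical container name
                "properties": {
                    "image": "mcr.microsoft.com/azuredocs/aci-helloworld",
                    "resources": {"requests": {"cpu": 1.0, "memoryInGB": 1.5}},
                    # volumeMounts: where the volume appears inside the container
                    "volumeMounts": [{"name": "secretvolume1", "mountPath": mount_path}],
                },
            }],
            "osType": "Linux",
            # volumes: defined once for the whole container group
            "volumes": [{
                "name": "secretvolume1",
                # secret: a tmpfs-backed volume, so the key lives only in memory
                "secret": {"storagekey": encoded},
            }],
        },
    }


group = secret_volume_group("my-storage-key")
print(group["properties"]["volumes"][0]["secret"])
```

Inside the container, the key would then be readable as a file named `storagekey` under the mount path.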

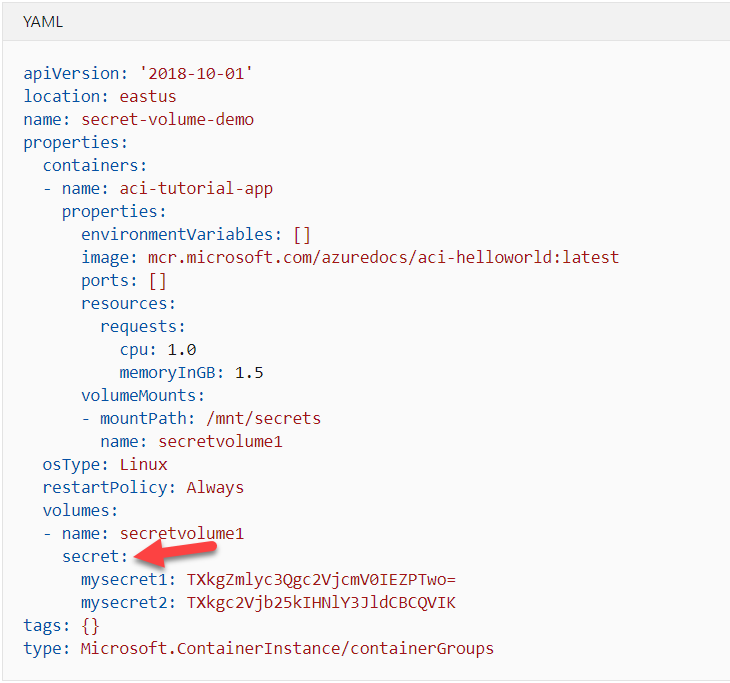

You have an Azure storage account. You have to fetch and set the metadata for the blobs in the storage account from a .NET module.

You call the following methods to set the metadata:

GetMetadata();

Metadata.Add();

Is this the right sequence of steps?

- A. Yes

- B. No

Explanation:

Answer – B

With the legacy (v11) .NET SDK, you fetch the properties of a blob by calling the FetchAttributesAsync() method.

To set the metadata, you then call Metadata.Add() followed by SetMetadataAsync().

For more information on working with properties and metadata from .Net, please refer to the following URL

You have an Azure storage account. You have to fetch and set the metadata for the blobs in the storage account from a .NET module.

You call the following methods to set the metadata:

FetchAttributesAsync();

Metadata.Add();

SetMetadataAsync();

Is this the right sequence of steps for the .NET v12 SDK?

- A. Yes

- B. No

Explanation:

Answer – B

No, this is not the right sequence of steps.

The correct steps are:

GetPropertiesAsync();

Metadata.Add();

SetMetadataAsync();

The Microsoft documentation mentions the following

For more information on working with properties and metadata from .Net, please refer to the following URL

You have an Azure storage account. You have to fetch and set the metadata for the blobs in the storage account from a .NET module.

You call the following methods to set the metadata:

GetMetadata();

Metadata.Add();

SetMetadataAsync();

Is this the right sequence of steps?

- A. Yes

- B. No

Explanation:

Answer – B

Here the requirement is "fetch and set metadata"

To retrieve metadata, call the GetProperties or GetPropertiesAsync method on your blob or container to populate the Metadata collection, then read the values.

To set metadata, add name-value pairs to the Metadata collection on the resource. Then, call one of the following methods to write the values:

SetMetadata

SetMetadataAsync

For more information on working with properties and metadata from .Net, please refer to the following URL
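The reason for fetching before setting is that a set-metadata call replaces all existing metadata on the blob rather than merging into it. The sketch below models that read-modify-write sequence with a hypothetical in-memory `FakeBlob` class; it is not an Azure SDK type, and its `get_properties` and `set_metadata` methods only stand in for `GetPropertiesAsync` and `SetMetadataAsync`.

```python
class FakeBlob:
    """Hypothetical in-memory stand-in for a blob (not an Azure SDK class)."""

    def __init__(self, metadata=None):
        self._metadata = dict(metadata or {})

    def get_properties(self):
        # Like GetPropertiesAsync: returns a snapshot that includes the metadata
        return {"metadata": dict(self._metadata)}

    def set_metadata(self, metadata):
        # Like SetMetadataAsync: replaces ALL existing metadata with the given pairs
        self._metadata = dict(metadata)


blob = FakeBlob({"docType": "textDocuments"})

metadata = blob.get_properties()["metadata"]  # 1. fetch the current metadata
metadata["category"] = "guidance"             # 2. add a name-value pair
blob.set_metadata(metadata)                   # 3. write the full collection back

print(blob.get_properties()["metadata"])
# {'docType': 'textDocuments', 'category': 'guidance'}
```

Skipping step 1 and calling `set_metadata({"category": "guidance"})` directly would silently drop the existing `docType` entry.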

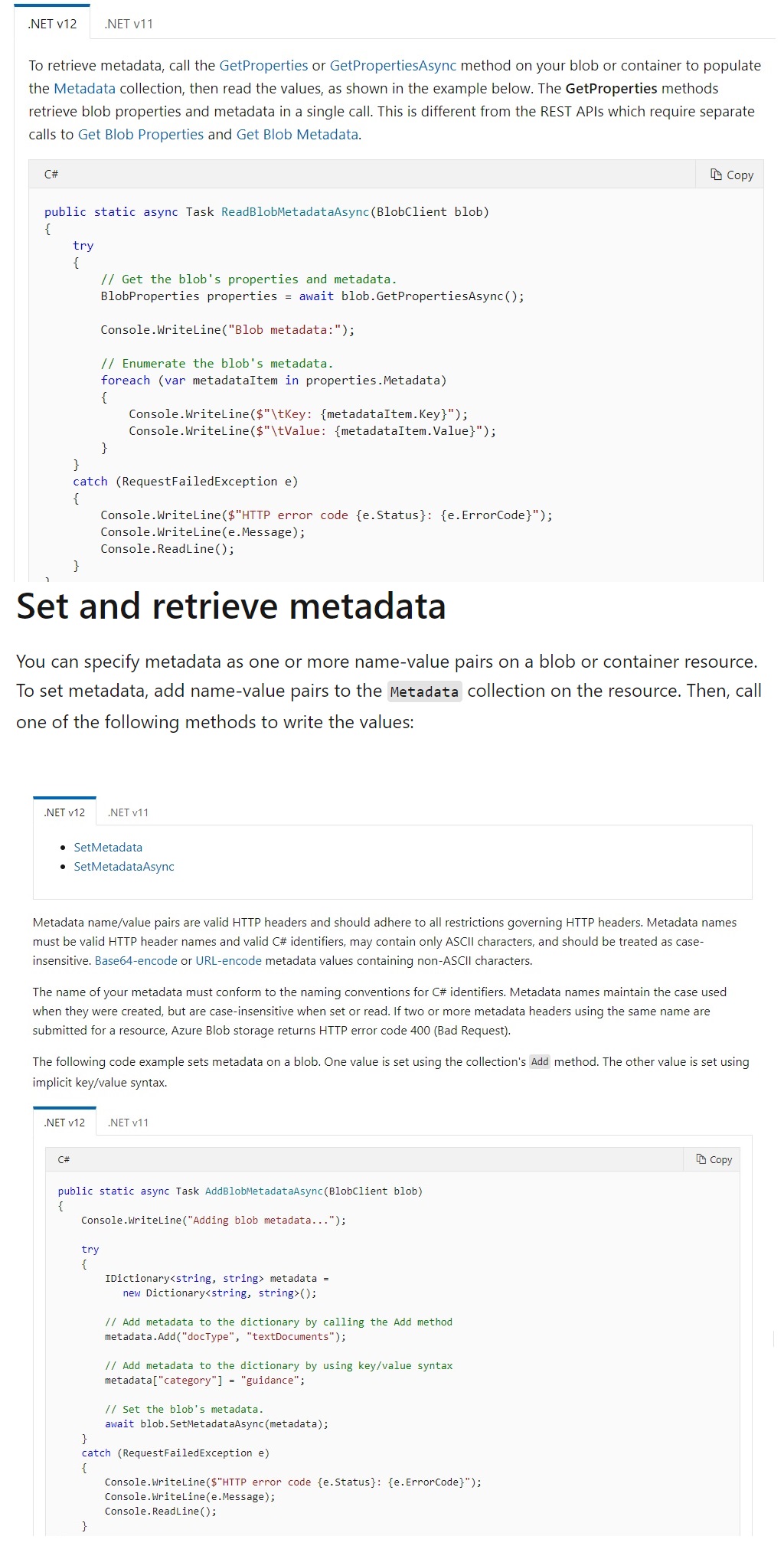

Your company has to develop a solution that uses a messaging service in Azure. The messaging system needs to have the following properties:

- Provide transactional support

- Support duplicate detection of messages

- Messages should never expire

Which two of the following options fulfill the requirements?

- A. Azure Event Hubs

- B. Azure Service Bus Queue

- C. Azure Service Bus topic

- D. Azure Storage Queues

Explanation:

Answer – B and C

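Azure Service Bus queues and topics support transactions and duplicate detection. In addition, when you create a queue or topic, you can set the default message time-to-live to a very long period (effectively years), so the messages practically never expire.

Options A and D are incorrect since neither Azure Event Hubs nor Azure Storage queues supports transactions or duplicate detection of messages. Event Hubs also retains events only for a limited retention period.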

For more information on message expiration for Azure Service Bus, please refer to the following URL
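The elimination above can be expressed as a simple capability filter. The table in this Python sketch is a simplified summary that covers only the two broker features that separate the options. It assumes the Standard or Premium Service Bus tier, since transactions and duplicate detection are not available in the Basic tier.

```python
# Simplified capability summary (assumes Service Bus Standard or Premium tier)
CAPABILITIES = {
    "A. Azure Event Hubs":        {"transactions": False, "duplicate_detection": False},
    "B. Azure Service Bus queue": {"transactions": True,  "duplicate_detection": True},
    "C. Azure Service Bus topic": {"transactions": True,  "duplicate_detection": True},
    "D. Azure Storage Queues":    {"transactions": False, "duplicate_detection": False},
}


def options_meeting(required):
    """Return the options whose capabilities include every required feature."""
    return [
        name for name, caps in CAPABILITIES.items()
        if all(caps.get(feature, False) for feature in required)
    ]


print(options_meeting(["transactions", "duplicate_detection"]))
# ['B. Azure Service Bus queue', 'C. Azure Service Bus topic']
```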

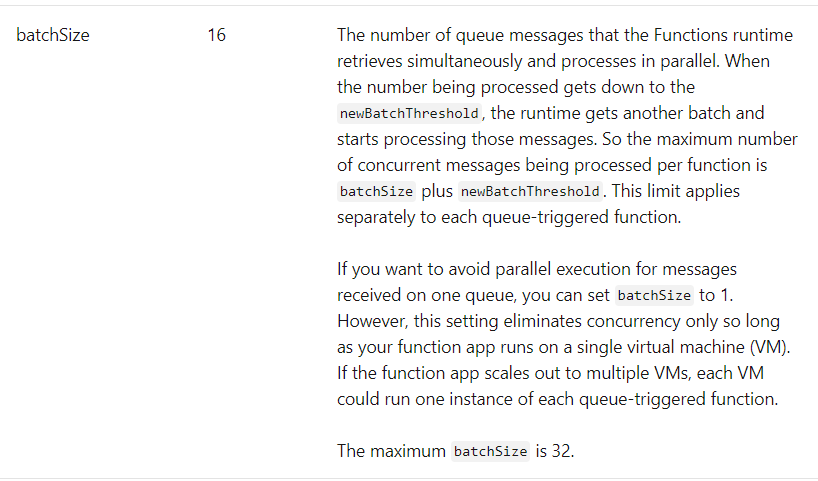

You currently have an Azure Function defined as part of your Azure subscription. The Function takes messages from an Azure Storage Queue and stores the messages in an Azure SQL Database.

At times, the Azure Function errors out with the following error message

Timeout expired. The timeout period elapsed prior to obtaining a connection from the pool. This may have occurred because all pooled connections were in use and max pool size was reached.

Which of the following can be done to resolve this issue?

- A. Edit the host.json file and change the batchSize property

- B. Edit the function.json file and change the queueMax property

- C. Change the trigger type to a Timer trigger

- D. Change the App Service Plan to a Premium App Service Plan

Explanation:

Answer – A

Here the issue is likely that the function processes many queue messages in parallel, and each execution holds a database connection, so together they exhaust the connection pool. You can either increase the Max Pool Size setting in the database connection string, or edit the host.json file and lower the batchSize property, which controls how many queue messages the Functions runtime retrieves and processes in parallel on each instance.

The Microsoft documentation mentions the following on the property

Since this is the ideal logical step to take, all other options are incorrect

For more information on the function bindings for storage queues, please refer to the following URL
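To see how host.json bounds parallelism, the sketch below reads the queue settings and computes the maximum number of messages one instance processes concurrently, which the Functions documentation gives as batchSize plus newBatchThreshold. The defaults used here (batchSize 16, maximum 32, newBatchThreshold of batchSize / 2) follow the documented Consumption-plan behavior; treat them as assumptions to check against your hosting plan and extension version.

```python
import json

HOST_JSON = """
{
  "version": "2.0",
  "extensions": {
    "queues": {
      "batchSize": 4,
      "newBatchThreshold": 2
    }
  }
}
"""


def max_parallel_queue_messages(host_json_text: str) -> int:
    """Upper bound on concurrently processed queue messages per instance."""
    queues = json.loads(host_json_text).get("extensions", {}).get("queues", {})
    batch_size = queues.get("batchSize", 16)  # documented default
    if not 1 <= batch_size <= 32:
        raise ValueError("batchSize must be between 1 and 32")
    # Assumption: Consumption-plan default of batchSize / 2 when not set explicitly
    threshold = queues.get("newBatchThreshold", batch_size // 2)
    return batch_size + threshold


print(max_parallel_queue_messages(HOST_JSON))  # 6
```

With the defaults, up to 24 executions per instance could each hold a SQL connection at the same time; lowering batchSize shrinks that number.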

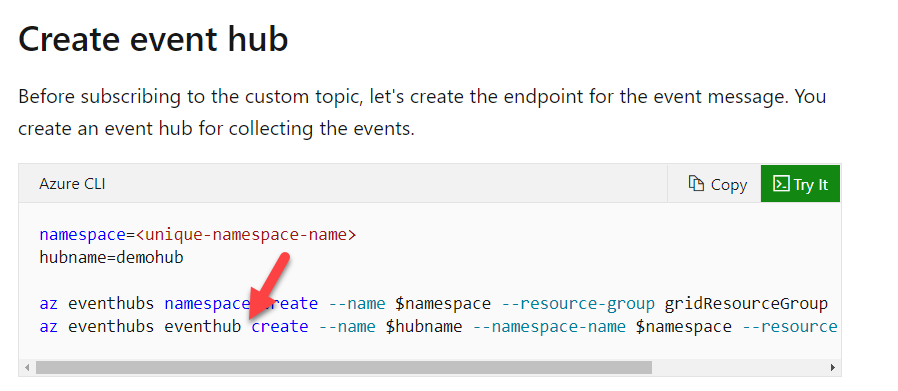

You have to develop a solution that performs the following operations:

- Configure events to be sent to an Azure Event Hubs instance

- The events would be sent via the Event Grid service

You have to complete the Azure CLI script below for this requirement

Which of the following would go into Area 1?

- A. eventgrid

- B. eventhub

- C. subscription

- D. topic

Explanation:

Answer – B

Here we need to use the eventhub keyword to create an event hub, as in az eventhubs eventhub create

An example of this is also given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on creating events for the event hub service, please refer to the following URL

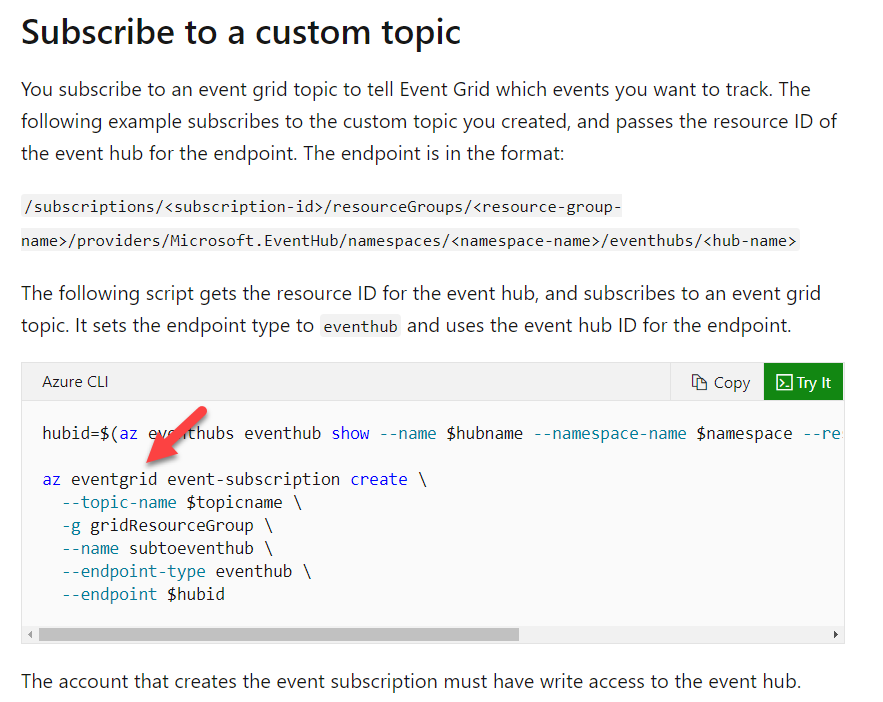

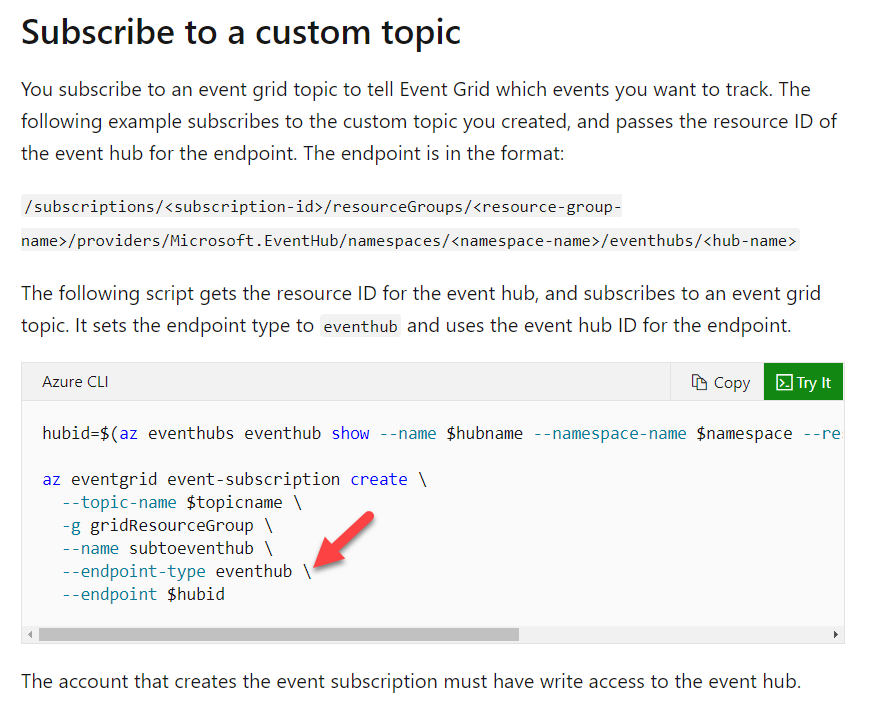

You have to develop a solution that performs the following operations:

- Configure events to be sent to an Azure Event Hubs instance

- The events would be sent via the Event Grid service

You have to complete the Azure CLI script below for this requirement

Which of the following would go into Area 2?

- A. eventgrid

- B. eventhub

- C. subscription

- D. topic

Explanation:

Answer - A

Here we need to use the eventgrid keyword to create an event subscription in Azure Event Grid, as in az eventgrid event-subscription create

An example of this is also given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on creating events for the event hub service, please refer to the following URL

You have to develop a solution that performs the following operations:

- Configure events to be sent to an Azure Event Hubs instance

- The events would be sent via the Event Grid service

You have to complete the Azure CLI script below for this requirement

Which of the following would go into Area 3?

- A. eventgrid

- B. eventhub

- C. subscription

- D. topic

Explanation:

Answer – B

Here we need to use the eventhub keyword as the value of the --endpoint-type parameter

An example of this is also given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on creating events for the event hub service, please refer to the following URL
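Running the real script requires the Azure CLI and a subscription, so the Python sketch below only assembles the two commands the three areas belong to, assuming the script follows this layout: `az eventhubs eventhub create` (Area 1) and `az eventgrid event-subscription create ... --endpoint-type eventhub` (Areas 2 and 3). All resource names, the subscription ID, and the source resource ID are placeholders, and the script in the question may pass additional flags.

```python
import shlex


def event_hub_subscription_commands(rg, namespace, hub, source_id, sub_name, subscription_id):
    """Build the two az CLI invocations as argument lists (all values are placeholders)."""
    hub_id = (
        f"/subscriptions/{subscription_id}/resourceGroups/{rg}"
        f"/providers/Microsoft.EventHub/namespaces/{namespace}/eventhubs/{hub}"
    )
    create_hub = [
        "az", "eventhubs", "eventhub", "create",
        "--resource-group", rg, "--namespace-name", namespace, "--name", hub,
    ]
    subscribe = [
        "az", "eventgrid", "event-subscription", "create",
        "--name", sub_name,
        "--source-resource-id", source_id,
        "--endpoint-type", "eventhub",  # deliver events to an event hub
        "--endpoint", hub_id,
    ]
    return create_hub, subscribe


commands = event_hub_subscription_commands(
    "myResourceGroup", "myNamespace", "myHub",
    "/subscriptions/0000/resourceGroups/myResourceGroup"
    "/providers/Microsoft.Storage/storageAccounts/mystorage",
    "toEventHub", "0000",
)
for cmd in commands:
    print(shlex.join(cmd))  # paste the printed lines into a shell with the Azure CLI installed
```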

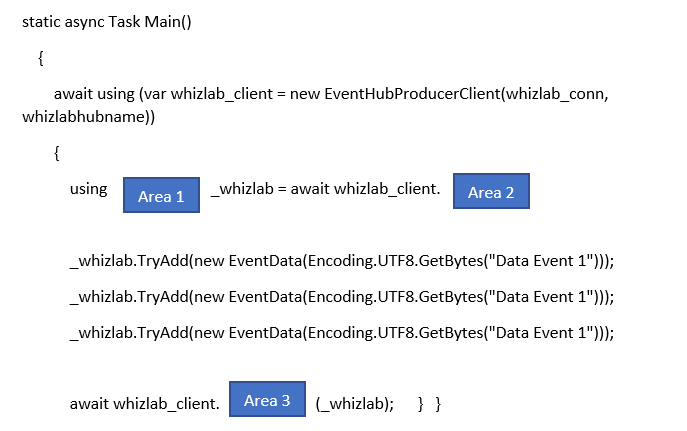

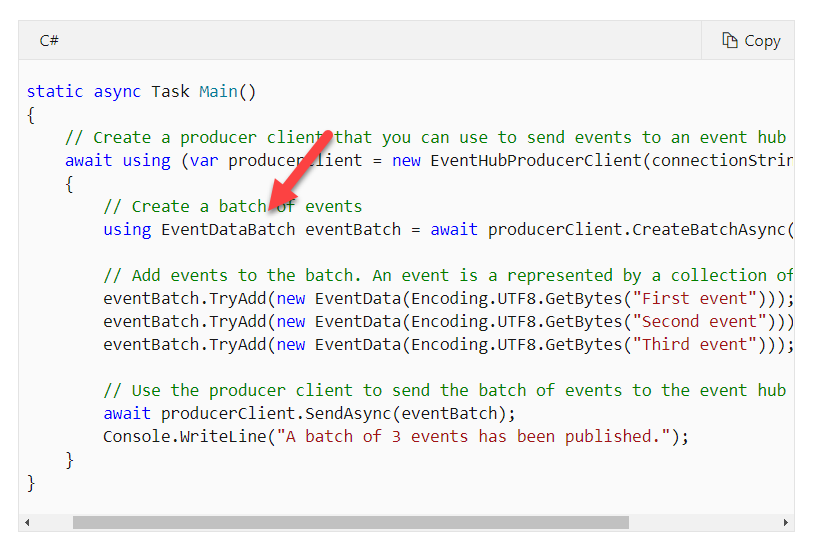

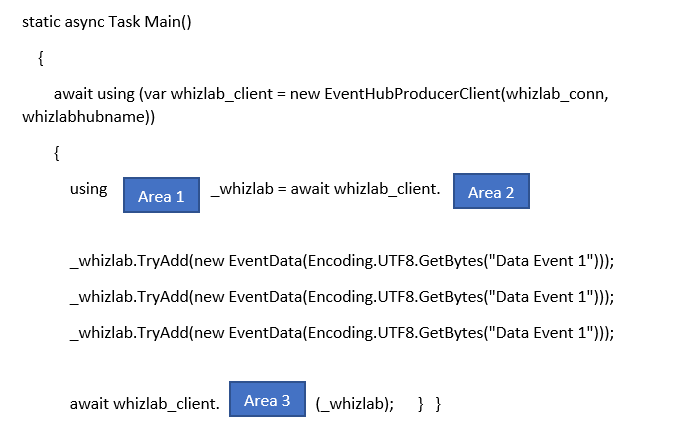

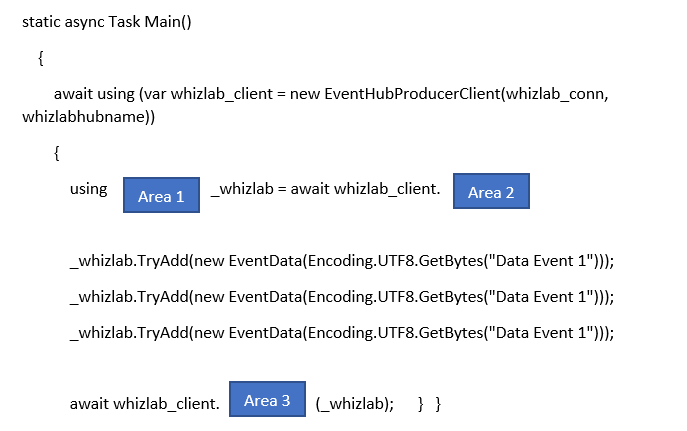

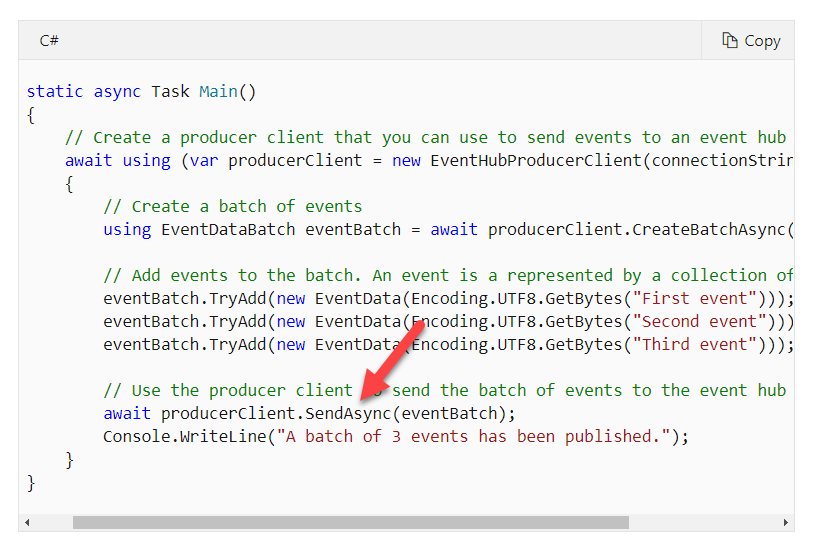

You have to develop an application that interacts with Azure Event Hubs. You have created an Event Hubs namespace and the event hub itself. You now have to develop the module that sends messages to the event hub.

Which of the following would go into Area 1?

- A. SendAsync

- B. EventData

- C. EventDataBatch

- D. CreateBatchAsync

Explanation:

Answer – C

Here we have to create an object of type EventDataBatch

An example of this is also given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on sending events to Azure Event Hubs, please refer to the following URL

You have to develop an application that interacts with Azure Event Hubs. You have created an Event Hubs namespace and the event hub itself. You now have to develop the module that sends messages to the event hub.

Which of the following would go into Area 2?

- A. SendAsync

- B. EventData

- C. EventDataBatch

- D. CreateBatchAsync

Explanation:

Answer - D

Here we have to call the CreateBatchAsync method on the producer client

An example of this is also given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on sending events to Azure Event Hubs, please refer to the following URL

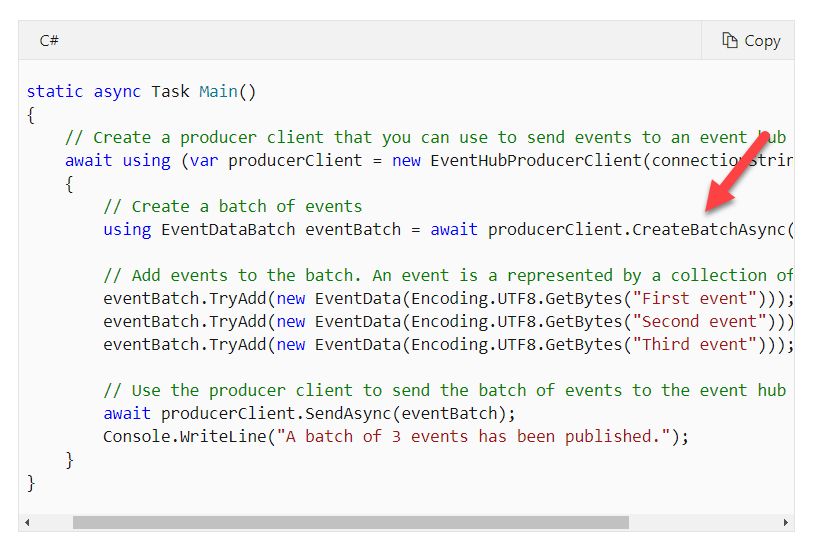

You have to develop an application that interacts with Azure Event Hubs. You have created an Event Hubs namespace and the event hub itself. You now have to develop the module that sends messages to the event hub.

Which of the following would go into Area 3?

- A. SendAsync

- B. EventData

- C. EventDataBatch

- D. CreateBatchAsync

Explanation:

Answer – A

Here we call the SendAsync method to send the batch of messages to the event hub

An example of this is also given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on sending events to Azure Event Hubs, please refer to the following URL
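The three answers fit together as a pattern: create a batch, fill it, send it. The real `EventHubProducerClient` needs a live namespace, so the Python sketch below models only the batching contract with a hypothetical `EventBatchSketch` class. Its `try_add` refuses an event that would push the batch past its size limit, mirroring how `EventDataBatch.TryAdd` returns false. The byte accounting is simplified; real batches also count per-event overhead.

```python
class EventBatchSketch:
    """Hypothetical stand-in for EventDataBatch: a size-capped batch (not an SDK class)."""

    def __init__(self, max_size_bytes: int):
        self.max_size_bytes = max_size_bytes
        self._events = []
        self._size = 0

    def try_add(self, body: bytes) -> bool:
        # Mirrors the TryAdd contract: refuse (return False) rather than overflow
        if self._size + len(body) > self.max_size_bytes:
            return False
        self._events.append(body)
        self._size += len(body)
        return True

    def __len__(self):
        return len(self._events)


def send_all(bodies, max_size_bytes, send):
    """Fill batches until try_add fails, send each full batch, then send the remainder."""
    batch = EventBatchSketch(max_size_bytes)  # like CreateBatchAsync
    for body in bodies:
        if not batch.try_add(body):
            send(batch)  # like SendAsync
            batch = EventBatchSketch(max_size_bytes)
            if not batch.try_add(body):
                raise ValueError("event is larger than the maximum batch size")
    if len(batch):
        send(batch)


sent = []
send_all([b"a" * 40, b"b" * 40, b"c" * 40], max_size_bytes=100,
         send=lambda b: sent.append(len(b)))
print(sent)  # [2, 1]
```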